well! i keep meaning to write detailed posts about all the cool stuff i’m learning, and then not getting around to it. alas.

for now: this past week i took intro to deep learning with my roommate. it was a really great time; it solidified what i knew and filled in some gaps. i typed up a few quick notes below, mostly from the two guest lectures, since they won’t post slides for those.

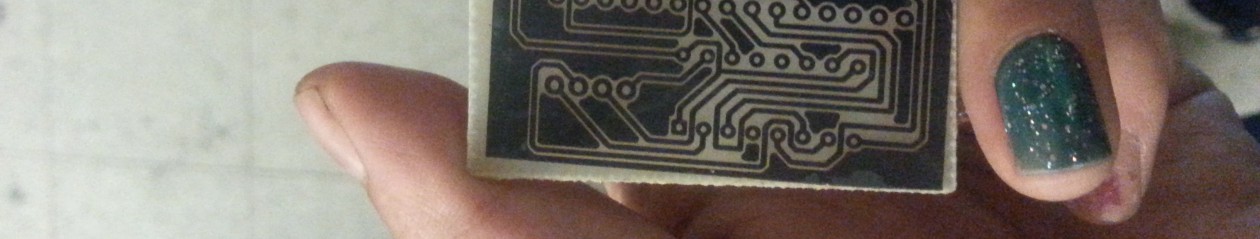

i’m also taking the “advanced machining” class: https://www.youtube.com/watch?v=qkjA94URV3k

I am enjoying it so far.

mostly i enjoy attending classes, and it’s motivating to take them with someone i know so we can discuss. it feels great to be in a room with lots of other smart nerds thinking about nerdy things. the outside world can be blissfully ignored. (see section: 2025 goals)

advanced machining

class #1 (i missed this and am watching the video now): it feels really wholesome to hear from someone who seems quite happy now, but who struggled in undergrad (took 7 years) and also felt shame about their startup (not VC funded, machining in house). some takeaways too (over-engineered heh).

last wed (class #2) was a blitz on stress, strain, etc. there was also a video tour of two machinists who just started their own shop. cool to me, since in China i saw many banks of machines, but i would never have known where to start in terms of talking to the machinists.

yesterday (class #3) i only caught the latter part. i walked in late because, well, i forgot about the class, but also the T was delayed 15-20 mins T^T, during which time i got to hear two high schoolers extensively discuss the latest dating gossip, a whirlwind of people and events over presumably ~6 months haha.

intro to deep learning

http://introtodeeplearning.com/ The recordings will presumably go up in a week or so. The labs are public online and can be run for free on google colab (although make sure not to just leave the tab open; I think you get maybe 4 hrs/week). https://github.com/MITDeepLearning/introtodeeplearning/tree/master The colab links are at the top of each notebook. Pick either pytorch or tensorflow as desired. note: I found some of the syntax tricky; fortunately they also provide working solution notebooks. for the last lab I did sink in $5 (they didn’t allow less), which technically can be reimbursed.

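e.g. day 1: i thought batches were just for parallel processing. they also make gradient updates more stable, since averaging the gradient over many samples smooths out the noise from any single sample. the “ada” in optimizers like adagrad/adam stands for adaptive learning rates: the step size adapts (per parameter) to how the gradients have been behaving, e.g. how large or noisy they’ve been.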
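a tiny sketch of both ideas, on a toy problem i made up (fitting y = 3x with squared error; nothing from the labs). `grad_std` measures how much the gradient estimate jumps around between random batches, and `adam_step` is a bare-bones single-parameter version of the standard Adam update with the usual default betas. real code would just use the framework's optimizer (e.g. `torch.optim.Adam`).

```python
import math
import random
import statistics

# Toy data (an assumption for illustration): y = 3x plus Gaussian noise.
random.seed(0)
TRUE_W = 3.0
data = [(x, TRUE_W * x + random.gauss(0, 1.0))
        for x in (random.uniform(-1, 1) for _ in range(10_000))]

def grad(w, batch):
    """Mean gradient of 0.5 * (w*x - y)^2 with respect to w over a batch."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)

def grad_std(w, batch_size, trials=500):
    """How much the gradient estimate varies across randomly drawn batches."""
    estimates = [grad(w, random.sample(data, batch_size)) for _ in range(trials)]
    return statistics.stdev(estimates)

def adam_step(w, g, state, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter; `state` holds t, m, v."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * g      # running mean of gradients
    state["v"] = beta2 * state["v"] + (1 - beta2) * g * g  # running mean of squared gradients
    m_hat = state["m"] / (1 - beta1 ** state["t"])         # bias correction for the zero init
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)       # step scaled by gradient history

if __name__ == "__main__":
    print("grad std, batch 1: ", grad_std(0.0, 1))
    print("grad std, batch 64:", grad_std(0.0, 64))
    w, state = 0.0, {"t": 0, "m": 0.0, "v": 0.0}
    for _ in range(300):
        w = adam_step(w, grad(w, random.sample(data, 32)), state)
    print("learned w:", w)
```

the batch-of-64 std should come out roughly 8x (√64) smaller than batch-of-1, which is the “more stable updates” part. in `adam_step`, dividing by the running RMS of past gradients is the “adaptive” part: each parameter effectively gets its own step size.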

day 2? 3?: i will skip over the CNN and RL (Q learning etc.) stuff since i’m more familiar with it. it was still good to ground my understanding, but i had fewer “aha” moments. i could also see where an audience member got lost: a filter does “downsize” (a 2×2 filter turns 2×2 pixels into 1), so you might imagine it quarters the image resolution. but actually you slide the filter over by one pixel and repeat the convolution, so with stride 1 and no padding the image only shrinks by (filter size − 1) per dimension, e.g. one pixel on each edge for a 3×3 filter. (quartering would be a 2×2 filter with stride 2, or 2×2 pooling.)
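a quick sanity check of the output-size arithmetic, assuming square images and kernels and no padding in the naive loop. `conv2d_valid` and `output_size` are names i made up; `output_size` is the standard (n + 2p − k)/s + 1 formula. like most DL libraries, the loop technically computes cross-correlation.

```python
def conv2d_valid(image, kernel, stride=1):
    """Naive 2D cross-correlation with no padding; image and kernel are lists of lists."""
    n_rows, n_cols = len(image), len(image[0])
    k = len(kernel)  # assumes a square k x k kernel
    out = []
    for i in range(0, n_rows - k + 1, stride):
        row = []
        for j in range(0, n_cols - k + 1, stride):
            # Dot product of the kernel with the k x k patch at (i, j).
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(k) for b in range(k)))
        out.append(row)
    return out

def output_size(n, k, stride=1, padding=0):
    """Output width for an n-wide input, k-wide kernel, given stride and padding."""
    return (n + 2 * padding - k) // stride + 1

if __name__ == "__main__":
    img = [[1.0] * 28 for _ in range(28)]
    for k, s in [(3, 1), (2, 1), (2, 2)]:
        out = conv2d_valid(img, [[1.0] * k for _ in range(k)], stride=s)
        print(f"{k}x{k} filter, stride {s}: {len(out)}x{len(out[0])}")
```

a 28×28 input with a 3×3 filter at stride 1 comes out 26×26 (one pixel off each edge); a 2×2 filter at stride 1 gives 27×27; only a 2×2 filter at stride 2 gives 14×14, i.e. a quarter of the pixels. with padding 1, `output_size(28, 3, padding=1)` stays 28.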

day 2? 3?: VAEs/GANs: this was basically all new content to me. the general idea of moving from deterministic model outputs to probabilistic outputs (for VAEs, hence a KL divergence term in the loss) was important to let the model flex outside of the training samples. it made the amorphous idea of modeling a “probability distribution” clearer: predict the mu and sigma of a normal distribution (over the latent variables) and sample from it.
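a minimal sketch of the VAE pieces, assuming the encoder outputs `mu` and `log_var` (log of sigma², a common choice for numerical stability) per latent dimension. the KL term is the closed form for a diagonal normal against a standard normal prior; using squared error as the reconstruction term is my assumption (it matches a Gaussian likelihood up to constants). this loss is VAE-specific; GANs train with an adversarial loss instead. the encoder and decoder networks are left out.

```python
import math
import random

def sample_latent(mu, log_var):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1),
    so the randomness sits outside mu and sigma and gradients can flow through them."""
    return [m + math.exp(0.5 * lv) * random.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

def vae_loss(x, x_recon, mu, log_var):
    """Reconstruction error (squared error, an assumption) plus the KL regularizer."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, log_var)
```

the KL term is zero exactly when the predicted distribution is N(0, 1) and grows as mu drifts from 0 or sigma from 1, which keeps the latent space smooth enough to sample new points from.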

day 2? 3?: diffusion models: i haven’t followed diffusion models at all. basically, they are trained to recover data from noise. take an image, make it noisy, then noisier, etc., all the way to pure random noise, and train the model to undo the noise at each step. then you have a self-supervised model that can generate stuff: start from random noise and denoise step by step.
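a sketch of the noising side of a DDPM-style diffusion model, assuming the linear beta schedule from the original DDPM paper (T = 1000, betas from 1e-4 to 0.02). the handy trick is that you can jump straight to any step t in closed form instead of adding noise t times. one common training setup (the one sketched here) asks the model to predict the noise `eps` that was added, which lets you recover the cleaner image; the model itself is left out, and `training_pair` is a made-up helper name.

```python
import math
import random

T = 1000
# Linear noise schedule (assumption: DDPM's 1e-4 to 0.02 over 1000 steps).
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bar_t = product of (1 - beta_s) for s <= t: how much original signal survives.
alpha_bars = []
prod = 1.0
for b in betas:
    prod *= 1.0 - b
    alpha_bars.append(prod)

def noisy_sample(x0, t):
    """Jump straight to step t: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps."""
    a = alpha_bars[t]
    eps = [random.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps

def training_pair(x0):
    """One training example: input (x_t, t), regression target eps (the added noise)."""
    t = random.randrange(T)
    xt, eps = noisy_sample(x0, t)
    return (xt, t), eps
```

by the last step almost nothing of the original survives (alpha_bar is on the order of 1e-5), which is why generation can start from pure noise.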

day 4 pt 1: literally people are using .split() on llm inputs and outputs
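roughly what that looks like, assuming you told the model in the prompt to end with a line like `Answer: ...` (the output string below is made up, and `extract_answer` is my own helper):

```python
# Hypothetical raw LLM output; the "Answer:" convention is something you'd ask for in the prompt.
raw_output = """Let me work through this.
3 boxes of 12 eggs is 36 eggs, minus 5 broken.
Answer: 31"""

def extract_answer(text, marker="Answer:"):
    """Return whatever follows the last occurrence of `marker`, or None if it's missing."""
    if marker not in text:
        return None
    return text.split(marker)[-1].strip()

if __name__ == "__main__":
    print(extract_answer(raw_output))
```

crude, but it works surprisingly often; returning `None` when the marker is missing lets you count format failures instead of crashing.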

intuition for why putting “you are an mit mathematician” in the prompt produces better results: on average, people on the internet are bad at math, and the LLM is a statistical engine. the persona simply biases the output toward the part of the training data that (probably) has better math.

intuition for why chain-of-thought prompting (i.e. just adding “think through this step-by-step”) helps: all ML is error driven, and a short output leaves relatively little “surface area” for the model to work through intermediate steps and make or correct a mistake.
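a sketch of wiring both tricks into a prompt, assuming the common role/content chat-message format (the exact format depends on which API or chat template you use; `build_messages` is a made-up helper):

```python
def build_messages(question, persona=None, step_by_step=False):
    """Build a chat-style message list with an optional persona and chain-of-thought nudge."""
    messages = []
    if persona:
        # Persona goes in the system message to bias the model toward "expert" text.
        messages.append({"role": "system", "content": f"You are {persona}."})
    content = question
    if step_by_step:
        # Ask for longer reasoning, plus a fixed final-answer line that's easy to parse.
        content += ("\n\nThink through this step-by-step, then give the final answer "
                    "on a line starting with 'Answer:'.")
    messages.append({"role": "user", "content": content})
    return messages

if __name__ == "__main__":
    for m in build_messages("What is 17 * 24?", persona="an MIT mathematician", step_by_step=True):
        print(m["role"], "->", m["content"])
```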

you can get a performance boost from more training data, but eventually performance will tank. takeaway: evaluation is really important.
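one standard way to catch the point where things start to tank is early stopping on a held-out set: keep the checkpoint with the best validation loss and stop once it hasn't improved for a few epochs. this is my example of the general point, not something from the lecture; `early_stopping_epoch` is a made-up helper and the loss values in the usage are invented.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch, stopping once validation loss
    hasn't improved for `patience` consecutive epochs."""
    best_epoch, best_loss, bad_epochs = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, bad_epochs = epoch, loss, 0  # new best checkpoint
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break  # give up: the model is getting worse on held-out data
    return best_epoch
```

with validation losses of `[2.0, 1.5, 1.3, 1.35, 1.6]` and `patience=2`, this returns epoch 2.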

day 4 pt 2: people are serious about using LLMs as judges of other LLMs’ output.
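a minimal sketch of the LLM-as-judge pattern: a grading prompt plus a parser for the score. the template wording and the 1-10 scale are my assumptions, and `call_llm` stands in for whatever model API you actually use (it's hypothetical: prompt string in, reply string out).

```python
import re

JUDGE_TEMPLATE = """You are grading an assistant's answer.
Question: {question}
Answer: {answer}
Rate the answer from 1 to 10 for correctness and helpfulness.
Reply with a short justification, then a final line of the form "Score: <n>"."""

def parse_score(judge_output):
    """Pull the last 'Score: n' out of the judge's reply; None if it broke the format."""
    matches = re.findall(r"Score:\s*(\d+)", judge_output)
    return int(matches[-1]) if matches else None

def judge(question, answer, call_llm):
    """Ask a judge model to grade one answer. `call_llm` is a hypothetical callable."""
    prompt = JUDGE_TEMPLATE.format(question=question, answer=answer)
    return parse_score(call_llm(prompt))

if __name__ == "__main__":
    fake_llm = lambda prompt: "The arithmetic checks out.\nScore: 8"  # stand-in judge
    print(judge("What is 2 + 2?", "4", fake_llm))
```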

the mixture of weights, i.e. model merging ((A + B)/2), started as a joke and is now used by every LLM company. in fact there are crazy family trees of mixtures of the mixtures themselves.
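the basic merge is just element-wise interpolation of two checkpoints with the same architecture (usually fine-tunes of the same base model). here a “model” is a dict of parameter name to flat list of floats, a toy stand-in for a real state dict; `merge_linear` is a made-up name, and `alpha=0.5` gives exactly (A + B)/2.

```python
def merge_linear(weights_a, weights_b, alpha=0.5):
    """Element-wise interpolation of two same-architecture models:
    alpha * A + (1 - alpha) * B for every parameter."""
    if weights_a.keys() != weights_b.keys():
        raise ValueError("models must have the same parameter names")
    return {
        name: [alpha * a + (1 - alpha) * b for a, b in zip(weights_a[name], weights_b[name])]
        for name in weights_a
    }

if __name__ == "__main__":
    model_a = {"layer.weight": [1.0, 3.0], "layer.bias": [0.0]}
    model_b = {"layer.weight": [3.0, 5.0], "layer.bias": [1.0]}
    print(merge_linear(model_a, model_b))
```

merging a merge with another model is the same call again, which is how the family trees grow.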

some parameters are pretty well known now (AdamW, 3-5 epochs, FlashAttention-2). learning rate is usually 1e-6 to 1e-3. batch size is 8 or 16, limited by how much VRAM you have (with gradient accumulation you can have a different “effective” batch size).
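a sketch of why gradient accumulation gives a bigger “effective” batch: averaging the gradients of 4 micro-batches of 8 is the same as the gradient of one batch of 32, so you only need VRAM for 8 samples at a time. toy linear-regression loss, and the function names are mine.

```python
import random

def mean_grad(w, batch):
    """Mean gradient of 0.5 * (w*x - y)^2 with respect to w."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)

def accumulated_grad(w, big_batch, micro_batch_size):
    """Split one 'effective' batch into micro-batches that fit in memory,
    add up their scaled gradients, and only then take an optimizer step."""
    if len(big_batch) % micro_batch_size:
        raise ValueError("effective batch must be a multiple of the micro-batch size")
    n_micro = len(big_batch) // micro_batch_size
    total = 0.0
    for i in range(n_micro):
        micro = big_batch[i * micro_batch_size:(i + 1) * micro_batch_size]
        total += mean_grad(w, micro) / n_micro  # scale so the sum is an average
    return total

if __name__ == "__main__":
    random.seed(0)
    batch = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(32)]
    print(accumulated_grad(0.5, batch, 8), mean_grad(0.5, batch))
```

in PyTorch this is the usual pattern of calling `loss.backward()` on each micro-batch (gradients add up in `.grad`), scaling the loss by 1/N, and calling `optimizer.step()` and `optimizer.zero_grad()` once every N micro-batches.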

post-training is the term for what people work on nowadays. we don’t bother e.g. re-tokenizing for a different language, but just fiddle with the weights after.

for instance, LoRA (low-rank adaptation): keep the LLM weights frozen, add small low-rank “adapter” matrices on top, and only train those for your task.
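a numpy sketch of a single LoRA-adapted linear layer, following the setup in the LoRA paper: the frozen weight `W` plus a low-rank update `B @ A` scaled by `alpha / r`, with `B` initialized to zero so training starts from the unchanged base model. the sizes (512×512, rank 8, alpha 16) are arbitrary illustration values, and the training loop is left out.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 512, 512, 8, 16  # illustrative sizes, not from the lecture

W = rng.normal(size=(d_out, d_in))          # frozen pretrained weight (never updated)
A = rng.normal(scale=0.01, size=(r, d_in))  # trainable, small random init
B = np.zeros((d_out, r))                    # trainable, zero init: adapter starts as a no-op

def lora_forward(x):
    """y = W x + (alpha / r) * B A x; only A and B would get gradient updates."""
    return W @ x + (alpha / r) * (B @ (A @ x))

def merged_weight():
    """Fold the adapter back into one matrix for inference."""
    return W + (alpha / r) * (B @ A)

full_params = W.size           # 262,144
lora_params = A.size + B.size  # 8,192
```

the adapter has about 3% of the layer's parameters here. after training you can fold the update back in with `merged_weight()`, so inference costs nothing extra.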

the train/test split is closer to 1-2% of samples held out for test, not 80/20 like in traditional ML.
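the split itself is trivial; the 2% default below just mirrors the 1-2% figure, and `train_test_split` here is my own helper (not scikit-learn's function of the same name).

```python
import random

def train_test_split(samples, test_fraction=0.02, seed=0):
    """Shuffle once with a fixed seed, hold out a small test slice, return (train, test)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))  # always keep at least one test sample
    return shuffled[n_test:], shuffled[:n_test]
```

with 10,000 samples that's 9,800 for training and 200 for test: plenty to spot regressions when each sample is a whole conversation.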

example of finetuning plus merging: to create a finnish language model, train a model that is good at finnish but bad at tasks overall, take a model that is excellent overall but bad at finnish, then merge them.

evaluation: it doesn’t work well and we don’t really know what we’re doing, but it’s really really important! (XD)

a lot of evaluation is actually about finding holes in the dataset and then fixing them / adding more samples.

future trends: test-time compute, e.g. at inference, ask for several solutions and take the most common answer (majority vote).
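a sketch of the majority-vote step, assuming you've already sampled several solutions (e.g. with temperature > 0) and extracted a final answer from each; this is often called self-consistency. answers that couldn't be parsed come in as `None` and are skipped. `majority_vote` is a made-up helper.

```python
from collections import Counter

def majority_vote(answers):
    """Most common answer among sampled solutions; ties go to the one seen first."""
    counts = Counter(a for a in answers if a is not None)
    return counts.most_common(1)[0][0] if counts else None

if __name__ == "__main__":
    sampled = ["31", "29", "31", None, "31"]  # invented answers from 5 samples
    print(majority_vote(sampled))
```

the extra compute is just the N samples; the vote itself is free, which is part of why this scales so easily.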

recommended libraries for finetuning: TRL from Hugging Face, axolotl (user friendly, on top of TRL; easy to share and “spy” on other people’s configs haha), unsloth (single gpu).

for supervised fine tuning: full fine-tuning is usually overkill (very high VRAM use); LoRA still needs fairly high VRAM but is recommended; QLoRA is not recommended because performance degrades.
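rough VRAM arithmetic behind those recommendations, using the common rule of thumb of ~16 bytes per parameter for mixed-precision Adam (2 for fp16 weights, 2 for grads, 12 for fp32 master weights plus Adam's two moments). it ignores activations, and the 1% trainable fraction for the adapter is an assumption (the real number depends on rank and which layers you adapt).

```python
def full_finetune_gb(n_params):
    # Rule of thumb (assumption): fp16 weights (2) + fp16 grads (2)
    # + fp32 master weights (4) + Adam m and v in fp32 (4 + 4) = 16 bytes/param.
    return n_params * 16 / 1e9

def lora_gb(n_params, trainable_fraction=0.01):
    # Frozen base weights in 16-bit (2 bytes/param) + full training cost for the adapter only.
    # trainable_fraction = 1% is an assumed adapter size.
    return n_params * 2 / 1e9 + full_finetune_gb(n_params * trainable_fraction)

def qlora_gb(n_params, trainable_fraction=0.01):
    # Base weights quantized to 4 bits (0.5 bytes/param) + the same adapter cost.
    return n_params * 0.5 / 1e9 + full_finetune_gb(n_params * trainable_fraction)

if __name__ == "__main__":
    n = 7e9  # a 7B-parameter model
    print(f"full: {full_finetune_gb(n):.1f} GB, LoRA: {lora_gb(n):.1f} GB, QLoRA: {qlora_gb(n):.1f} GB")
```

for a 7B model that's about 112 GB for full fine-tuning, ~15 GB for LoRA, and ~4.6 GB for QLoRA (before activations), which is why full fine-tuning is usually overkill on a single GPU.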

pre-training: trillions of tokens; post-training: > 1M samples (e.g. general-purpose chatbot); fine-tuning: 100k-1M samples for domain-specific (e.g. medical LLM), 10k-100k for task-specific (e.g. spell checker).