i guess it has been on my mind a lot between working in the federal government in the Musk era and also having lots of friends in tech, but

if you eek 10% more out of each employee, running at 110%, that sounds amazing perhaps as a CEO

“hey look we’re such an elite, in-demand company, our company-wide policy is to fire the lowest 10% every year”

but what actually happens is that teams no longer have time to help other teams and become territorial (“file a ticket if you want help”). it’s way more efficient for someone with expertise to spend an hour or two helping out another team that’s stuck. but that’s not possible when everyone is asked for 110%.

it’s actually better from the CEO perspective to aim for 90% and bask in the flow of collaboration and productivity. (I say from the armchair)

but i’m starting to realize CEOs are also just people, maybe even somewhat risk-averse people, and thus may be prone to groupthink (see: the absurd proliferation of LLMs into every piece of software. literally found a “CEO playbook for generative AI” by IBM).

and in the “mandatory layoffs” situation (which apparently is policy at facebook now), people can actually start sabotaging other people. at one of the rallies, I met a lady who worked on internet infrastructure for 25 years. one of the reasons for retiring was over the decades when this sort of “corporate mindset” spread from GE (who started it) to her small company employer. (also apparently the % of women very noticeably decreased)

understaffing vs overstaffing

nowadays i think about organizational dysfunction a lot. and how understaffing can cause the same symptoms as overstaffing: people aren’t getting work done, not because of some colossal waste of money, but because people can’t get the things they need to do their job, because other teams are too short-staffed to help provision the needed resources, so people just make do until the other team can get around to it.

and the solution is actually more staffing in the appropriate places, rather than terrorizing people into magically producing more work.

side note: underwatering and overwatering plants can give similar symptoms too ! but wildly unrelated

other things on my mind:

being haunted by a fellow party-goer’s words,

“We could have had the first AIDS free generation. But not anymore.”

just knowing those deaths, and the dismantling of USAID, is washed away in all the chaos

“the first thing the world’s richest man did when he got unfettered access to power was take away money for the poor and medicine for the sick” (some internet quote)

and also in Signal gate, lost in the discussion is the fact that this (presumably bad person) was visiting his partner’s apartment complex and in bombing that building, 50+ other innocent people died for the bad fortune of living in the same apartment building. 💪 🇺🇸 ?

but overall, my feelings are still: top priorities for fixing what’s broken in our country is… axing medical research? seriously?

and my friend saying he absolutely recognizes the bullying behavior (re: trump targeting harvard) since that’s the way the nigerian president operates

it was nice to bike past harvard plaza today and see a clump of people with “northeastern supports harvard” today.

hattip to various people i’ve talked to over the past month(s) who i guess i always err on the side of undernaming but idk

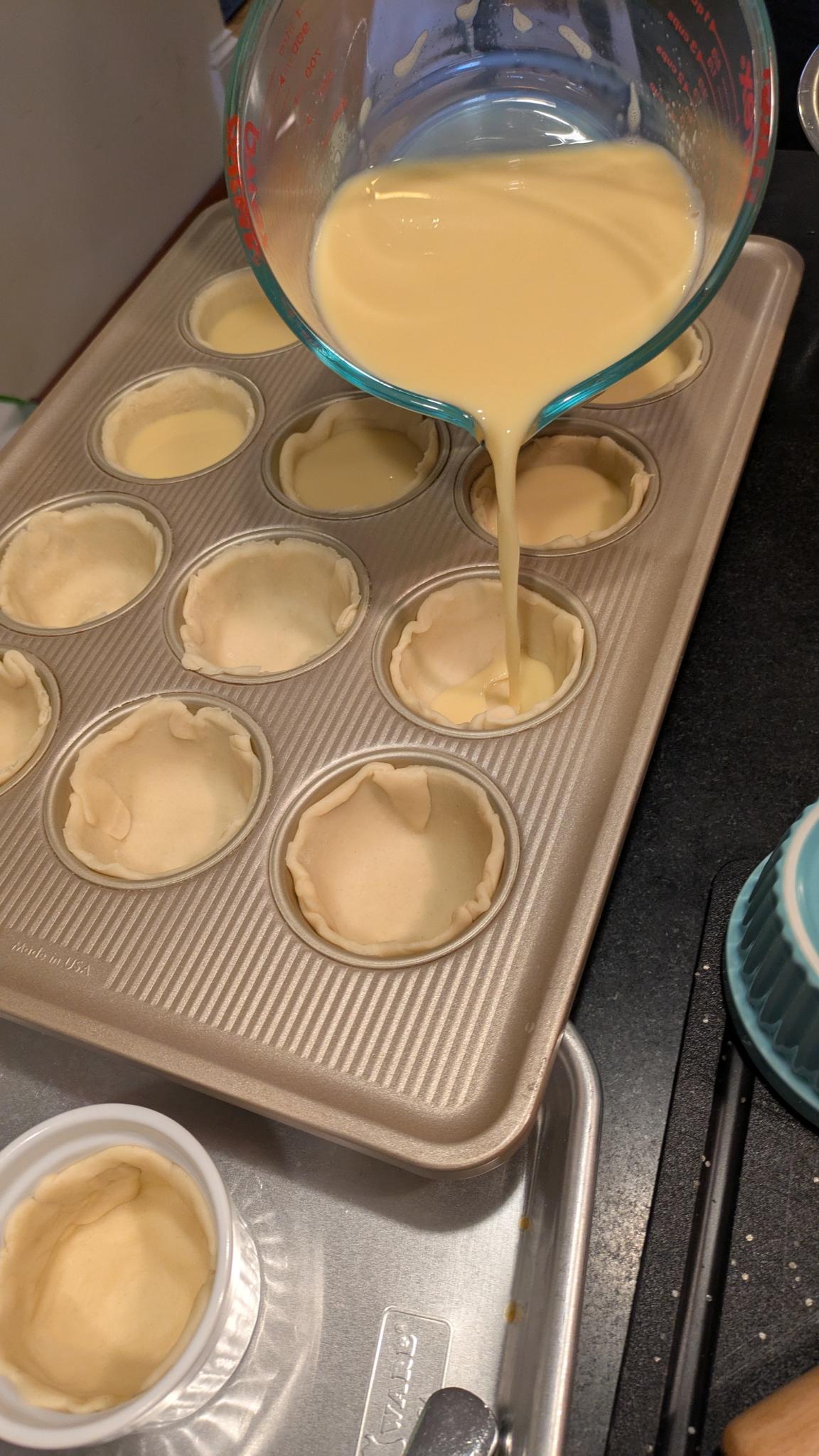

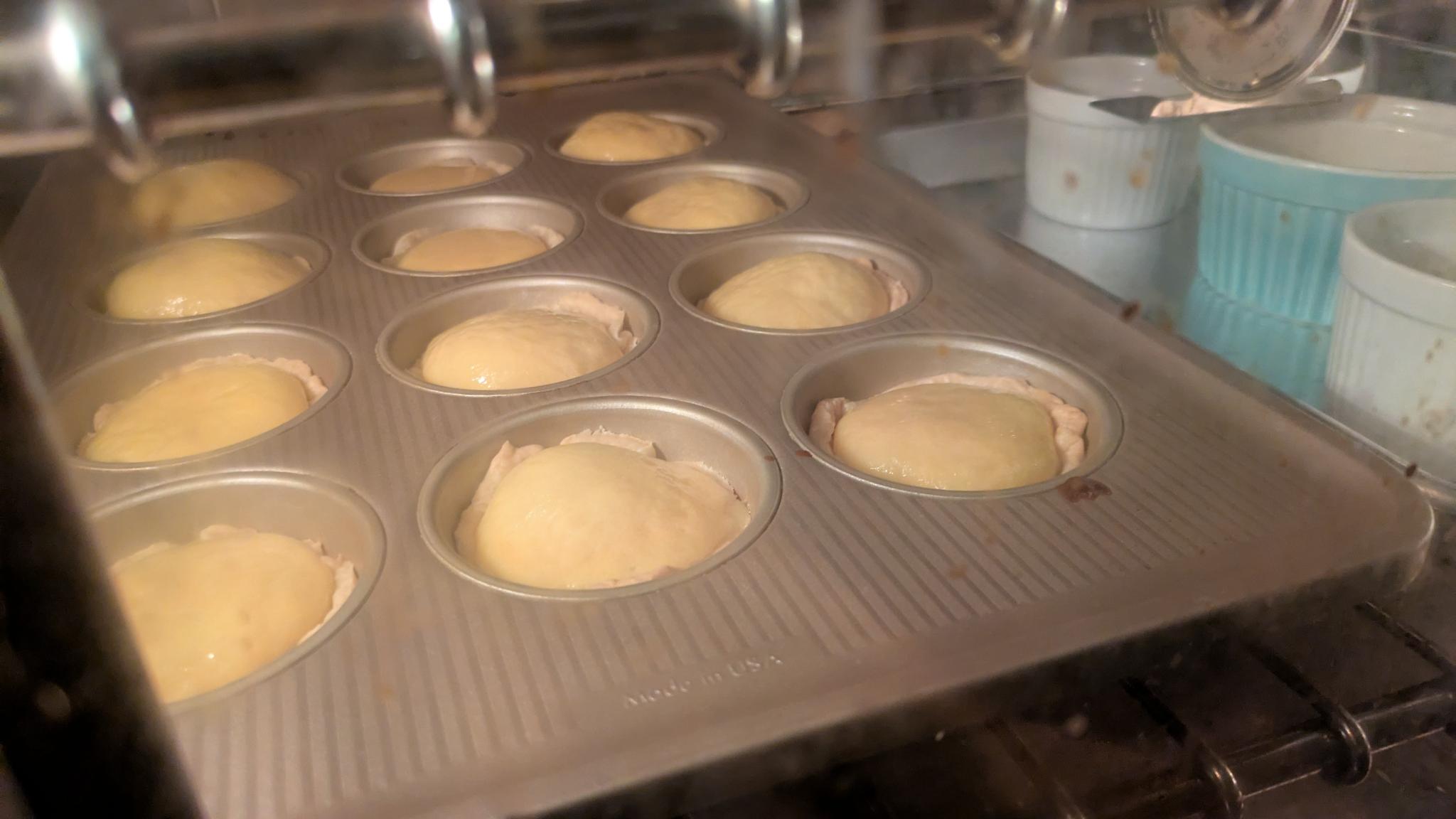

Pouring custard into the crusts

Pouring custard into the crusts The custards rise!

The custards rise!