This past weekend I looked into how to create an avatar, like the chibi ones that people use for livestreams, per the following instructions:

Become a grass virtual Youtuber and stream yourself. Demonstrate that you can control the model. The stream should have a minimum length of 2 minutes.

https://puzzlefactory.place/puzzles/touch-grass-challenge-impossible

I did this as part of the scavenger hunt in MIT Mystery Hunt 2023. In some sense I put way too much time into it, but for me the point of Mystery Hunt is to make friends and have fun, and this scavenger hunt certainly helped a lot with that.

Instructions

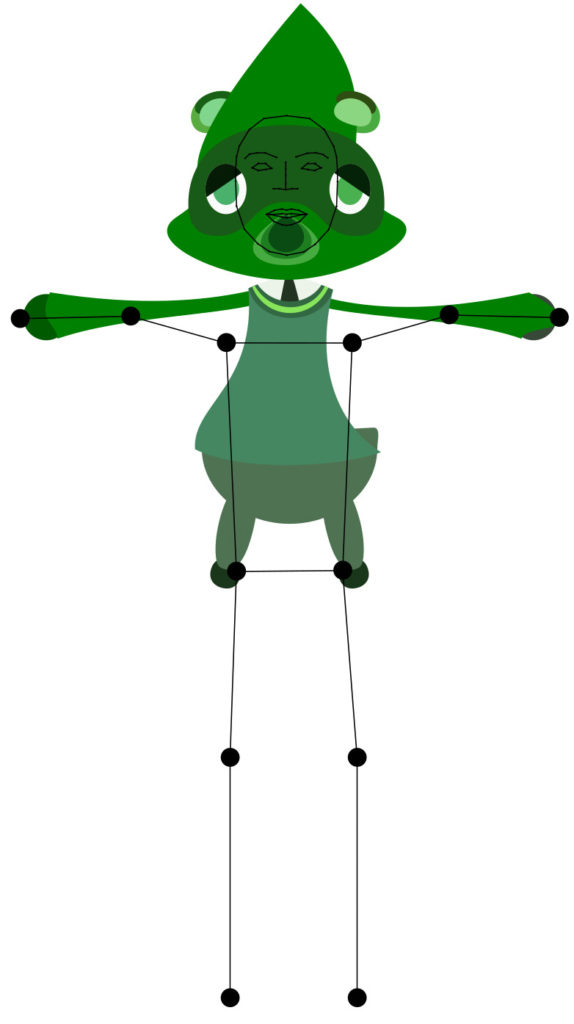

The Pose Animator project has a great demo where you can just drag and drop an SVG into the page to change your avatar!

https://pose-animator-demo.firebaseapp.com/camera.html

And they provide several SVG examples here:

https://github.com/yemount/pose-animator/tree/master/resources/illustration

Unfortunately, whenever I ungrouped the illustration in Inkscape to edit it and then regrouped it, the resulting file no longer worked in the demo. So all I could do was change the color of entire objects and call it a day.
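I haven't verified why this happens, but here is one possible explanation. As I understand the Pose Animator README, a custom illustration needs two groups side by side:

- a group with id `skeleton`, whose joint circles keep their original names;
- a group with id `illustration`, which contains only flat `path` elements.

When you regroup in Inkscape, the new group gets an auto-generated id such as `g123`, so those names could be lost. The sketch below checks an SVG for that structure. The required ids and the "paths only" rule are assumptions based on my reading of the README. The function name and the sample files are hypothetical.

```python
import xml.etree.ElementTree as ET

SVG = "{http://www.w3.org/2000/svg}"
INKSCAPE_LABEL = "{http://www.inkscape.org/namespaces/inkscape}label"
# Assumed required group ids, based on my reading of the Pose Animator README.
REQUIRED = ("skeleton", "illustration")


def check_pose_animator_svg(svg_text: str) -> list[str]:
    """Return a list of suspected structural problems (empty if none found)."""
    root = ET.fromstring(svg_text)
    problems = []
    groups = {g.get("id"): g for g in root.iter(SVG + "g") if g.get("id")}

    for name in REQUIRED:
        if name not in groups:
            problems.append(f"no group with id={name!r}")

    # Assumption: the illustration group should hold only flat <path> children.
    illustration = groups.get("illustration")
    if illustration is not None:
        for child in illustration:
            tag = child.tag.replace(SVG, "")
            if tag != "path":
                problems.append(f"'illustration' contains <{tag}>, expected only <path>")

    # As far as I know, renaming in Inkscape's Objects panel sets inkscape:label,
    # which is separate from the id attribute checked above.
    for g in root.iter(SVG + "g"):
        label = g.get(INKSCAPE_LABEL)
        if label in REQUIRED and g.get("id") != label:
            problems.append(f"group labelled {label!r} has id={g.get('id')!r}")

    return problems


good = """<svg xmlns="http://www.w3.org/2000/svg">
  <g id="skeleton"><circle r="1"/></g>
  <g id="illustration"><path d="M0 0 L10 10"/></g>
</svg>"""

# Hypothetical example of what an ungroup/regroup in Inkscape might leave behind.
regrouped = """<svg xmlns="http://www.w3.org/2000/svg"
     xmlns:inkscape="http://www.inkscape.org/namespaces/inkscape">
  <g id="skeleton"><circle r="1"/></g>
  <g id="g123" inkscape:label="illustration"><g id="g456"><path d="M0 0"/></g></g>
</svg>"""

print(check_pose_animator_svg(good))  # → []
print(check_pose_animator_svg(regrouped))
```

The second call reports two problems: the missing `illustration` id and a group that is only *labelled* `illustration`. If this explanation is right, you would fix it by setting the id back (for example in Inkscape's Object Properties dialog).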

Some details of the inner workings

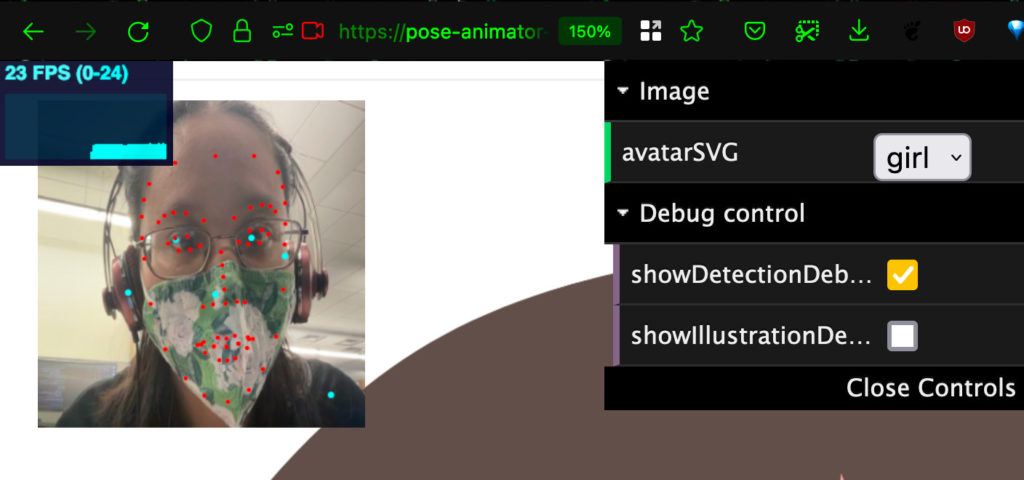

You can see the PoseNet tracking here:

And if you open the example SVG files, they look like this:

Further details

I haven’t run it, but the code is open-source and available here:

https://github.com/yemount/pose-animator/

I shared my screen with OBS Studio. I just shared my entire desktop (which sort of defeats the purpose of a virtual avatar…), but you can crop and resize the capture in OBS Studio so that you are not in the image.

Alternatively, if you have more than 30 minutes, you could actually install and run the software… 🙂 I’ll update this post if I ever do that, or if I ever figure out how to edit the file in Inkscape to create a truly custom avatar…
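One untested idea for the custom-avatar problem is to skip Inkscape and edit the SVG text directly. That way nothing is ungrouped and every `id` stays exactly as it was. The minimal sketch below swaps hex fill colors according to a palette. The function name `recolor_svg`, the sample file and the colors are hypothetical. It only handles `fill="#…"` attributes and `fill:#…` entries in inline `style`; named colors, CSS classes and gradients are not covered. Whether the demo accepts the result is an assumption I haven't tested.

```python
import re

# Matches fill="#hex" attributes and fill:#hex entries inside style="...".
# The lookbehind skips property names that merely end in "fill"; "fill-opacity"
# never matches because "fill" must be followed by "=" or ":".
FILL_HEX = re.compile(r"""((?<![-\w])fill\s*(?:=\s*["']|:\s*))(#[0-9a-fA-F]+)""")


def recolor_svg(svg_text: str, palette: dict[str, str]) -> str:
    """Swap hex fill colors using palette (old -> new); ids and grouping are untouched."""
    lookup = {old.lower(): new for old, new in palette.items()}

    def swap(match: re.Match) -> str:
        prefix, color = match.group(1), match.group(2)
        # Colors not in the palette are written back unchanged.
        return prefix + lookup.get(color.lower(), color)

    return FILL_HEX.sub(swap, svg_text)


svg = """<svg xmlns="http://www.w3.org/2000/svg">
  <g id="illustration">
    <path id="hair" fill="#FF0000" d="M0 0 L5 5"/>
    <path style="fill:#ff0000;fill-opacity:1" d="M1 1 L6 6"/>
  </g>
</svg>"""

print(recolor_svg(svg, {"#ff0000": "#3a7d2c"}))
```

I chose a plain text substitution over parsing and re-serializing the XML because re-serializing could rename namespace prefixes or drop comments. The substitution leaves every byte outside the matched colors as it was.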