2025 goals

re: blissfully ignoring the outside world, that is my goal for 2025: be a rich d*bag. something about earning lots of money and donating it. turn a blind eye to … misery … or something …

… okay i don’t think i could go so far as to work on promoting tobacco but there’s probably stuff in between like “study illicit massage parlor industry and be depressed about humanity” and “figure out how to circumvent climate change regulations to expand oil and gas drilling”. otherwise i don’t think i can be useful in 2025-2029. thanks sexism. on the other hand still super proud i got to vote for two different women presidential candidates in my lifetime!!!! one day it will happen. even if i have to do it myself heh.

running

i guess that will be a goal. first though, finish my app, “have you run as much as my hamster”.com. my hamster ursula probably runs 1-3 miles every night heh

anger as an identity

I think a lot of my identity was built around anger. it made me angry as a kid to travel and see someone younger than me, missing limbs, in rags, traveling around on essentially a furniture moving dolly, begging for money. meanwhile i had flown across the world.

Why would the world be so cruel and unjust? it really made me mad. this anger drove me past any insecurity and anxiety and self-hatred to keep going. i didn’t believe i could get into MIT, but i applied in part because yes, i wanted to change the world.

I didn’t believe i could get into grad school, but i applied because — okay actually i just applied because i’d be paid the same but actually get health insurance. it didn’t have much to do with anger lol

Anyway, anger in various forms has driven me through life. In some sense, anger is part of my identity, and I’m afraid to let go of it. I fear that if I stop being angry, I’ll stop trying to change the world.

But having anger at the world as a part of my identity makes coming to terms with my inability to change it rather painful. Or makes it harder to see the small bits I do change and the change that happens over time.

I want to give myself permission to be happy, to be confident that I won’t ever stop trying to change the world.

My wild implementation plan is to go to the opposite extreme and focus on being a rich douchebag and/or having tech bro optimism (that tech will fix the world), idk lol it’ll be a fun year

reflections on undergrad me: pep talk needed

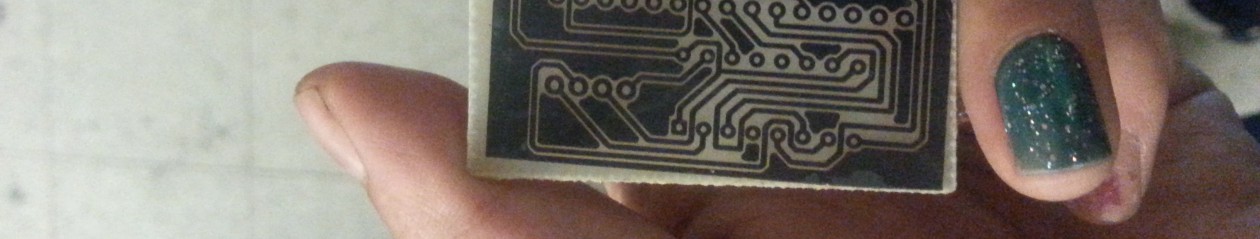

i rewatched my hexapods video https://www.youtube.com/watch?app=desktop&v=qTh-OGA_LeM

(context: for the 2.007 class which is a robot competition, i elected to go do my own thing and build a hexapod, because … idk i wanted a dancing hexapod)

and WOW i can hear all the lack of confidence and downplaying and a little bit of the misery (that’s probably more my own memory though) in the (rather mumbled) voiceover. and reading my old instructables. i actually did a ton. it’s not as much as i wanted but it’s still a ton. it helps me hear it in my own voice right now.

when i watch a video of 2025 me in ten years, i want to come away with a sense that this person is super competent, confident, articulate, and rightfully proud of their own achievements and technical skills