In short:

You can find the Inkscape source, plus an example page (as a PDF) ready to print, on GitHub.

github.com/nouyang: Dot Grid Paper

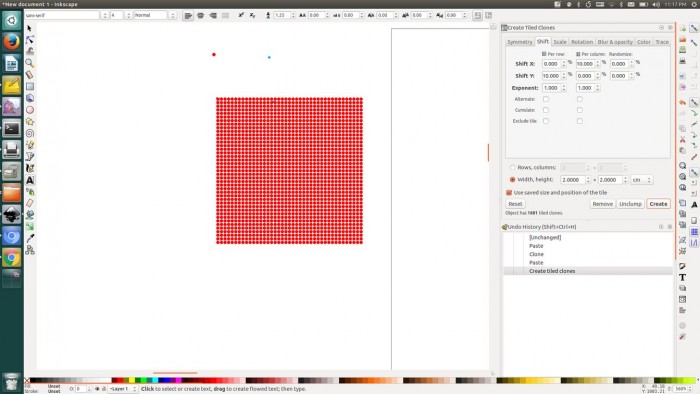

preview

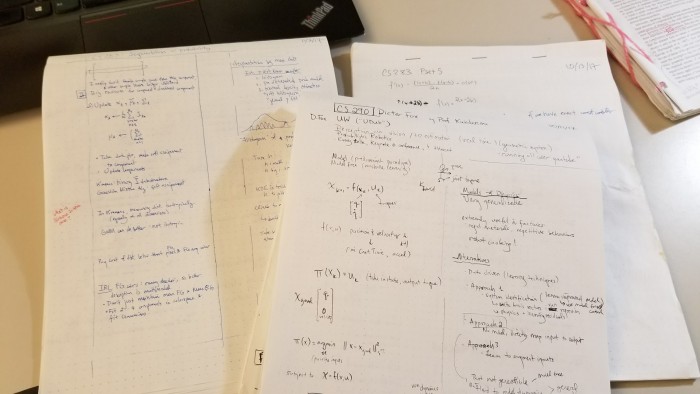

example notebooks

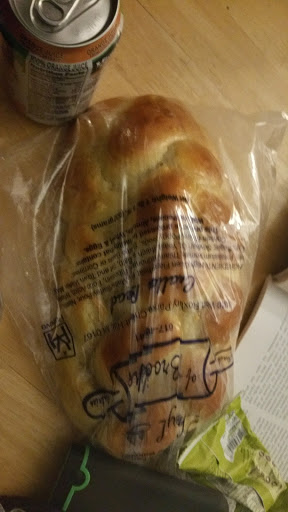

just stapling ~15 pages together at the top. The issues I found: it's hard to tell which way the notebook flips, and it's hard to flip through pages fast. Also, at 15 pages each, I started accumulating “notebooks” really fast, and their chronological order wasn't always obvious. The plan was to detach them, three-hole punch them, and put them into a binder. I haven’t gotten around to that yet, and probably never will, since the semester is almost over…

using manila folders I found lying around and a somewhat-industrial stapler to bind ~25 pages. I haven’t used this enough to weigh the pros and cons.

the overall con, though, is that now my notes are spread across five different notebooks…

in detail

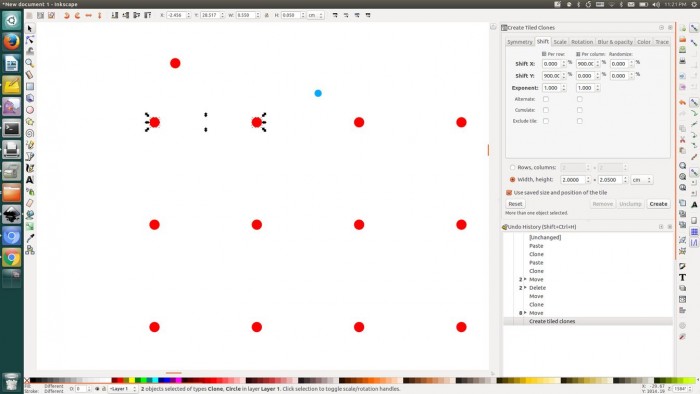

I reformatted the file to properly use clones and Inkscape's tiled clones tool (the “clone tile factory”, under Edit > Clone > Create Tiled Clones…). I also settled on a different layout for the “darker” lines (before, they divided the page evenly into 4 sections vertically).

Using tiled clones, I have just two sizes of dots: small and large. I can change the opacity, color, size, stroke, etc. of *all* dots just by editing those two “source” dots. If you’re having trouble finding them, click any dot and press Shift+D (Edit > Clone > Select Original) to jump to its source dot.

- http://www.linuxformat.com/wiki/index.php/Inkscape_-_cloning_and_tiling

- http://tavmjong.free.fr/INKSCAPE/MANUAL/html/Tiles.html

- http://tavmjong.free.fr/INKSCAPE/MANUAL/html/Clones.html
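For the curious, here is roughly what the clones look like under the hood: in the saved SVG, a clone is a `<use>` element that points at its original via `xlink:href`. The following is a minimal sketch (Python standard library only) that builds a similar dot grid directly. The page size, spacing, dot radii, the ids `small-dot`/`large-dot`, the rule that every 5th row and column uses the large dot, and the function name `dot_grid_svg` are all hypothetical choices for illustration, not the settings in the actual file on GitHub. For simplicity it keeps the two originals in `<defs>`.

```python
# Hypothetical sketch: generate a dot-grid SVG in which every dot is a clone
# (<use> element) of one of two source dots, mimicking a tiled-clone setup.
# All dimensions and ids below are illustrative assumptions.
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
XLINK_NS = "http://www.w3.org/1999/xlink"
ET.register_namespace("", SVG_NS)
ET.register_namespace("xlink", XLINK_NS)


def dot_grid_svg(width_mm=215.9, height_mm=279.4, spacing_mm=5.0,
                 major_every=5, small_r=0.2, large_r=0.35):
    """Return SVG markup for a dot grid; sizes are in millimetres (assumed)."""
    svg = ET.Element(f"{{{SVG_NS}}}svg", {
        "width": f"{width_mm}mm",
        "height": f"{height_mm}mm",
        "viewBox": f"0 0 {width_mm} {height_mm}",
    })
    defs = ET.SubElement(svg, f"{{{SVG_NS}}}defs")
    # The two source dots: restyling these restyles every clone at once.
    for dot_id, radius in (("small-dot", small_r), ("large-dot", large_r)):
        ET.SubElement(defs, f"{{{SVG_NS}}}circle", {
            "id": dot_id, "cx": "0", "cy": "0",
            "r": str(radius), "fill": "#808080",
        })
    cols = int(width_mm // spacing_mm)
    rows = int(height_mm // spacing_mm)
    # Interior grid points only (the page edges are skipped).
    for row in range(1, rows):
        for col in range(1, cols):
            # Every major_every-th row/column forms a "darker" line of large dots.
            is_major = row % major_every == 0 or col % major_every == 0
            source = "#large-dot" if is_major else "#small-dot"
            ET.SubElement(svg, f"{{{SVG_NS}}}use", {
                f"{{{XLINK_NS}}}href": source,
                "x": f"{col * spacing_mm:g}",
                "y": f"{row * spacing_mm:g}",
            })
    return ET.tostring(svg, encoding="unicode")


if __name__ == "__main__":
    with open("dot_grid.svg", "w", encoding="utf-8") as f:
        f.write(dot_grid_svg())
```

Changing `fill` or `r` on one of the two `<circle>` elements in `<defs>` restyles every dot that references it. That is the same property the clone setup gives you inside Inkscape.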

double-sided

To print the same grid on both sides, concatenate the single-page PDF with itself:

`pdftk tiled_clones_30.pdf tiled_clones_30.pdf output merged.pdf`

printing notes

Print at high resolution (600×600 dpi or greater): b&w-only printers tend to show aliasing effects, and old toner cartridges tend to leave blemishes across the pages. Print at actual size (“no scale” / no “shrink to fit”).
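As a rough illustration of why resolution matters for marks this small: a printed dot of diameter d millimetres spans d / 25.4 × dpi printer dots. The sketch below assumes a hypothetical 0.4 mm dot (the actual dot size isn't given here), and the function name `printer_dots_across` is only for illustration.

```python
# Back-of-the-envelope check: how many printer dots (pixels) span one grid dot.
# The 0.4 mm dot diameter is a hypothetical value, not taken from the real file.
MM_PER_INCH = 25.4


def printer_dots_across(diameter_mm: float, dpi: int) -> float:
    """Number of printer dots across a printed circle of the given diameter."""
    return diameter_mm / MM_PER_INCH * dpi


if __name__ == "__main__":
    for dpi in (150, 300, 600, 1200):
        across = printer_dots_across(0.4, dpi)
        print(f"{dpi:>5} dpi: {across:.1f} printer dots across a 0.4 mm dot")
```

At 300 dpi the hypothetical dot is only about 4.7 printer dots wide, so its round edge is approximated on a very coarse grid. At 600 dpi it is about 9.4, which leaves more room for a uniform, round dot.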
