THIS POST IS A WORK IN PROGRESS.

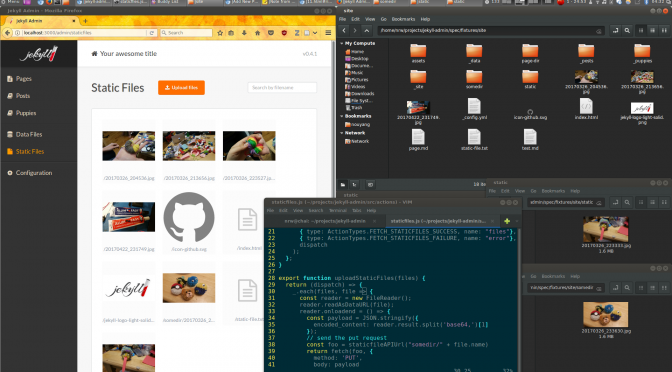

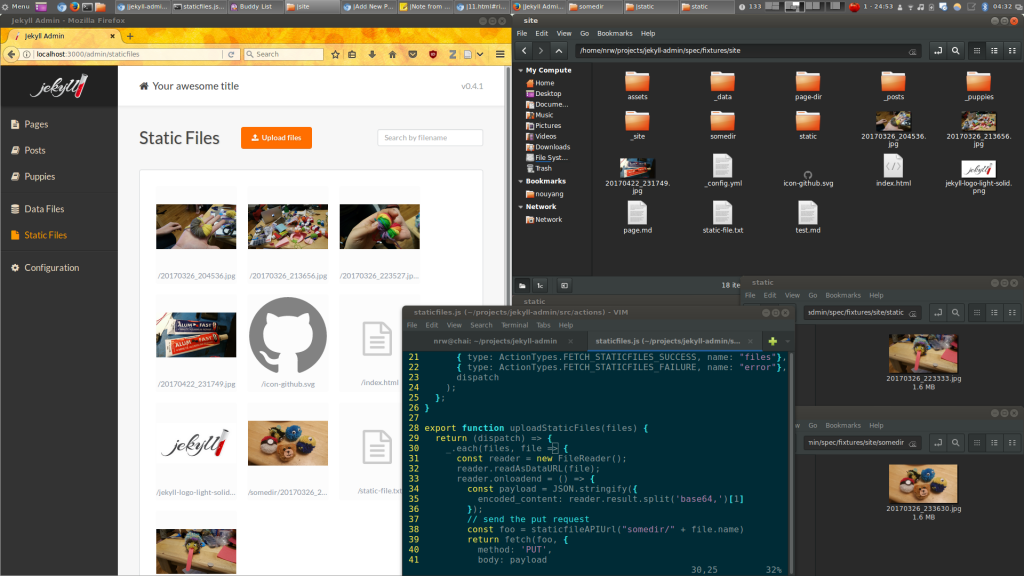

I was able to get static files to upload to a different directory. The directory is created automatically by Jekyll Admin, and the thumbnail even populates in http://localhost:3000/admin/staticfiles!

First steps for addressing: https://github.com/jekyll/jekyll-admin/issues/201

Feature request: Specify folder to upload static files to #201

Next steps: add a small GUI control for choosing the target folder (“assets” or something like that). I'm not sure yet how to keep it from being an arbitrary folder while still allowing user input.
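One way to keep the folder from being arbitrary while still accepting user input is to check the input against a short allowlist. This is only a sketch: `ALLOWED_UPLOAD_DIRS` and `resolveUploadDir` are hypothetical names and are not part of jekyll-admin.

```javascript
// Hypothetical allowlist; none of these names come from jekyll-admin.
const ALLOWED_UPLOAD_DIRS = ['assets', 'static', 'uploads'];

// Returns the cleaned folder name if it is on the allowlist, otherwise null.
function resolveUploadDir(input, allowed = ALLOWED_UPLOAD_DIRS) {
  if (typeof input !== 'string') return null;
  // Trim whitespace and leading/trailing slashes: " /assets/ " -> "assets"
  const cleaned = input.trim().replace(/^\/+|\/+$/g, '');
  return allowed.includes(cleaned) ? cleaned : null;
}

console.log(resolveUploadDir('assets/'));
console.log(resolveUploadDir('../etc'));
```

A GUI control could then offer the allowlist entries as choices and ignore anything for which the function returns `null`.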

https://github.com/jekyll/jekyll-admin/blob/3463b7ed98d43e721389ade9470c06dd863a5b9e/src/actions/staticfiles.js

    // Prepend "static/" to the URL returned by staticfileAPIUrl, then send the PUT
    const foo = "static/" + staticfileAPIUrl(file.name);
    return fetch(foo, {
      method: 'PUT',
      body: payload
    })
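The hard-coded `"static/"` prefix could be turned into a parameter. The following is a sketch under assumptions, not the jekyll-admin implementation: `uploadStaticFile` and `dir` are hypothetical names, and `buildUrl` and `fetchImpl` are stand-ins for `staticfileAPIUrl` and `fetch`, injected so the function can be tried without a running server.

```javascript
// Sketch: upload one file with PUT, prefixing the URL with a folder name.
// buildUrl stands in for staticfileAPIUrl; fetchImpl stands in for fetch.
function uploadStaticFile(file, payload, dir, buildUrl, fetchImpl) {
  const url = dir + '/' + buildUrl(file.name);
  return fetchImpl(url, { method: 'PUT', body: payload });
}

// Usage with stand-ins (no network access needed):
const calls = [];
const fakeFetch = (url, options) => {
  calls.push({ url, options });
  return Promise.resolve({ ok: true });
};
uploadStaticFile({ name: 'cat.png' }, '{}', 'static', (n) => 'files/' + n, fakeFetch);
console.log(calls[0].url, calls[0].options.method);
```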

==================================== INSTALL ======================

My distribution had Ruby installed already.

sudo apt install ruby-dev

git clone https://github.com/jekyll/jekyll-admin && cd jekyll-admin

sudo gem update --system

Gem::Ext::BuildError: ERROR: Failed to build gem native extension.

An error occurred while installing rainbow (2.2.2), and Bundler cannot continue.

sudo gem install rake

Bundle complete! 9 Gemfile dependencies, 46 gems now installed.

Use `bundle show [gemname]` to see where a bundled gem is installed.

script/bootstrap: line 7: npm: command not found

sudo apt install npm

nrw@chai:~/projects/jekyll-admin$ script/server-frontend

sh: 1: npm-run-all: not found

npm install npm-run-all

nrw@chai:~/projects/jekyll-admin$ script/server-frontend

/usr/bin/env: ‘node’: No such file or directory

sudo apt install nodejs --> already installed

https://github.com/jekyll/jekyll-admin/search?utf8=%E2%9C%93&q=upload&type=

curl -sL https://deb.nodesource.com/setup_6.x | sudo -E bash -

sudo apt-get install -y nodejs

sh: 1: babel-node: not found

nrw@chai:~/projects/jekyll-admin$ npm-run-all clean lint build:*

npm-run-all: command not found

https://askubuntu.com/questions/603921/cant-use-npm-installed-packages-from-command-line
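The `npm-run-all: command not found` error when calling the command directly follows from how npm installs packages: a plain `npm install <pkg>` links executables under the project's `node_modules/.bin`, which is not on the shell's `PATH`. A small sketch for checking where a locally installed command ends up (`localBinPath` and `hasLocalBin` are hypothetical helper names):

```javascript
const fs = require('fs');
const path = require('path');

// Path where npm links executables of locally installed packages.
function localBinPath(projectDir, command) {
  return path.join(projectDir, 'node_modules', '.bin', command);
}

// True if the command was installed locally in the given project.
function hasLocalBin(projectDir, command) {
  return fs.existsSync(localBinPath(projectDir, command));
}

console.log(localBinPath('.', 'npm-run-all'));
console.log(hasLocalBin(process.cwd(), 'npm-run-all'));
```

When the second line prints `true`, the command can be run from the project root as `./node_modules/.bin/npm-run-all`.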

https://github.com/jekyll/jekyll-admin/issues/201

https://www.npmjs.com/package/npm-run-all

https://www.npmjs.com/package/babel-node

nrw@chai:~/projects/jekyll-admin$ npm install babel-node

> babel-node@6.5.3 postinstall /home/nrw/projects/jekyll-admin/node_modules/babel-node

> node message.js; sleep 10; exit 1;

┌─────────────────────────────────────────────────────────────────────────────┐

│ Hello there undefined 😛 │

│ You tried to install babel-node. This is not babel-node 🚫 │

│ You should npm install -g babel-cli instead 💁 . │

│ I took this module to prevent somebody from pushing malicious code. 🕵 │

│ Be careful out there, undefined! 👍 │

└─────────────────────────────────────────────────────────────────────────────┘

^C

npm install babel-cli

npm ERR! Failed at the jekyll-admin@0.4.1 start-message script 'babel-node tools/startMessage.js'.

npm ERR! Make sure you have the latest version of node.js and npm installed.

npm ERR! If you do, this is most likely a problem with the jekyll-admin package,

npm ERR! not with npm itself.

nodejs --version

npm --version

https://www.npmjs.com/package/babel-cli

npm update npm -g

nrw@chai:~/projects/jekyll-admin$ script/server-frontend

> jekyll-admin@0.4.1 remove-dist /home/nrw/projects/jekyll-admin

> rimraf ./lib/jekyll-admin/public

/home/nrw/projects/jekyll-admin/node_modules/babel-core/lib/transformation/file/options/option-manager.js:328

throw e;

^

Error: Couldn't find preset "stage-0" relative to directory "/home/nrw/projects/jekyll-admin"

nrw@chai:~/projects/jekyll-admin$ npm install babel-preset-stage-0

/home/nrw/projects/jekyll-admin/node_modules/babel-core/lib/transformation/file/options/option-manager.js:328

throw e;

^

Error: Couldn't find preset "react" relative to directory "/home/nrw/projects/jekyll-admin"

/home/nrw/projects/jekyll-admin/node_modules/babel-core/lib/transformation/file/options/option-manager.js:328

throw e;

^

Error: Couldn't find preset "react" relative to directory "/home/nrw/projects/jekyll-admin"

npm install react
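In Babel 6, a preset named `X` in the Babel config is looked up as the npm package `babel-preset-X`, so the errors above point at `babel-preset-stage-0` and `babel-preset-react` rather than at the `react` package itself. A sketch of that mapping follows; the helper names `presetPackageName` and `missingPresetPackages` are made up for illustration, and the `isInstalled` callback is an assumption so the example runs without touching `node_modules`.

```javascript
// Map a Babel 6 preset short name to the npm package that provides it.
function presetPackageName(preset) {
  return preset.startsWith('babel-preset-') ? preset : 'babel-preset-' + preset;
}

// List the preset packages that the isInstalled callback reports as absent.
function missingPresetPackages(presets, isInstalled) {
  return presets.map(presetPackageName).filter((pkg) => !isInstalled(pkg));
}

// Example: pretend only the stage-0 preset package is installed.
const installed = new Set(['babel-preset-stage-0']);
console.log(missingPresetPackages(['stage-0', 'react'], (pkg) => installed.has(pkg)));
```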

$ script/bootstrap

script/server-frontend

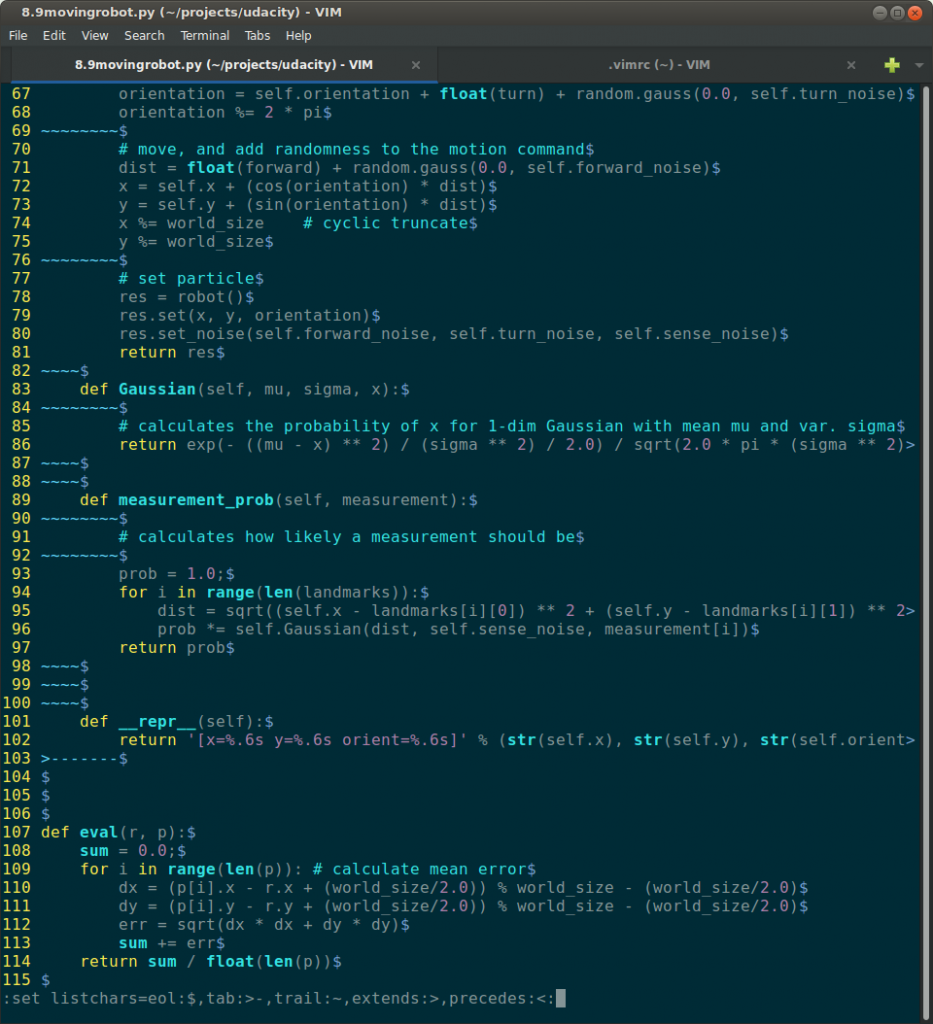

==================================== static file URL ======================

https://github.com/jekyll/jekyll-admin/search?p=2&q=upload&type=&utf8=%E2%9C%93

https://github.com/jekyll/jekyll-admin/blob/3463b7ed98d43e721389ade9470c06dd863a5b9e/src/actions/staticfiles.js

https://github.com/jekyll/jekyll-admin/search?utf8=%E2%9C%93&q=staticfileAPIUrl&type=

// send the put request

return fetch(staticfileAPIUrl(file.name), { method: 'PUT', body: payload })

https://github.com/jekyll/jekyll-admin/blob/2e31c09a265868d5f29b8bce407041da70a7261b/src/utils/fetch.js

https://github.com/jekyll/jekyll-admin/blob/3463b7ed98d43e721389ade9470c06dd863a5b9e/src/constants/api.js
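For reference while reading `api.js`, here is a sketch of how a URL helper could accept an optional folder. The `/_api` root and the `static_files` path segment are assumptions made for this example and should be checked against the linked file; `staticfileUrlInDir` is a hypothetical name.

```javascript
// Assumed API root; verify against src/constants/api.js before relying on it.
const API_ROOT = '/_api';

// Build an upload URL, encoding each path segment separately so the slashes
// between folders are kept while spaces and other characters are escaped.
function staticfileUrlInDir(filename, dir = '') {
  const segments = dir.split('/').concat(filename).filter(Boolean);
  const encoded = segments.map(encodeURIComponent).join('/');
  return `${API_ROOT}/static_files/${encoded}`;
}

console.log(staticfileUrlInDir('my photo.png', 'assets/img'));
```

Building the folder into the helper keeps the call site in `staticfiles.js` unchanged apart from passing the extra argument.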

http://stackoverflow.com/questions/377768/string-concatenation-in-ruby#377787

vi staticfiles.js