(i’ll sketch out this post and update it as i find the time) — actually will just post in two parts jeez how can i write this much — so —

part 1

i caught covid holy f*k

…or so i thought

amusingly i am working through 6.041 on mit ocw which is the undergrad probability class, and like a perfect complement to the lectures I got a real life lesson on conditional probability

(wrote a section on covid testing terms if you’re unfamiliar)

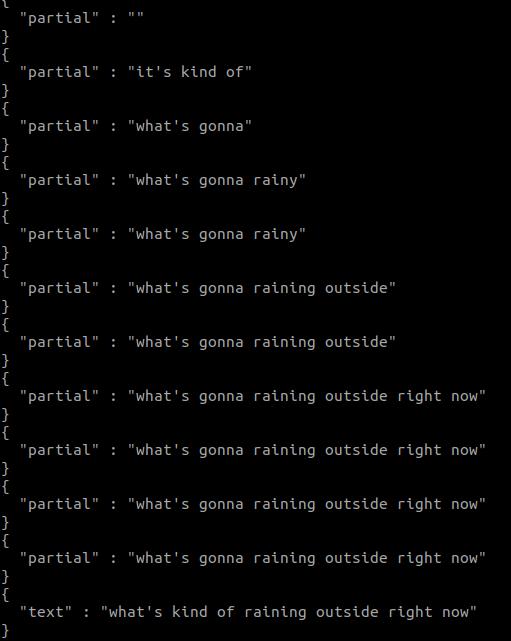

testing positive

flew home to GA for new years, omicron had already picked up quite a bit and so I wore a proper N95 (i actually didn’t have a KN95 / N95 for the malta international trip) and returned to superstitious practices, in this case a new one, mouthwash. still not eating on planes

got a quickvue test via kaiser permanente drive-through testing appointment that had to turn people away. tested negative on 1/1 (three days after my flight)

had indoor dining in georgia 1/1 (begrudgingly) and freaked myself tf out. had 5-6 ft spacing, 20% capacity (in a place that seats 200 so v. spacious), my table was 5 people, all double and most triple-vaxxed. (i am not sure why it’s more impolite to turn down indoor dining than invite someone in the first place without considering their safety budget…)

dim sum was v. tasty though

i think because of my grudge about being coerced to go (and also… the inviter mentioning having caught covid and having eaten indoors 3-4 times before and not feeling the need to get a booster right away), i was convinced i’d catch omicron because of the dining, like it just felt karma was waiting to get to me after i did my trip to malta and somehow didn’t get COVID (to my parents’ credit they did try to change it to outdoor dining)

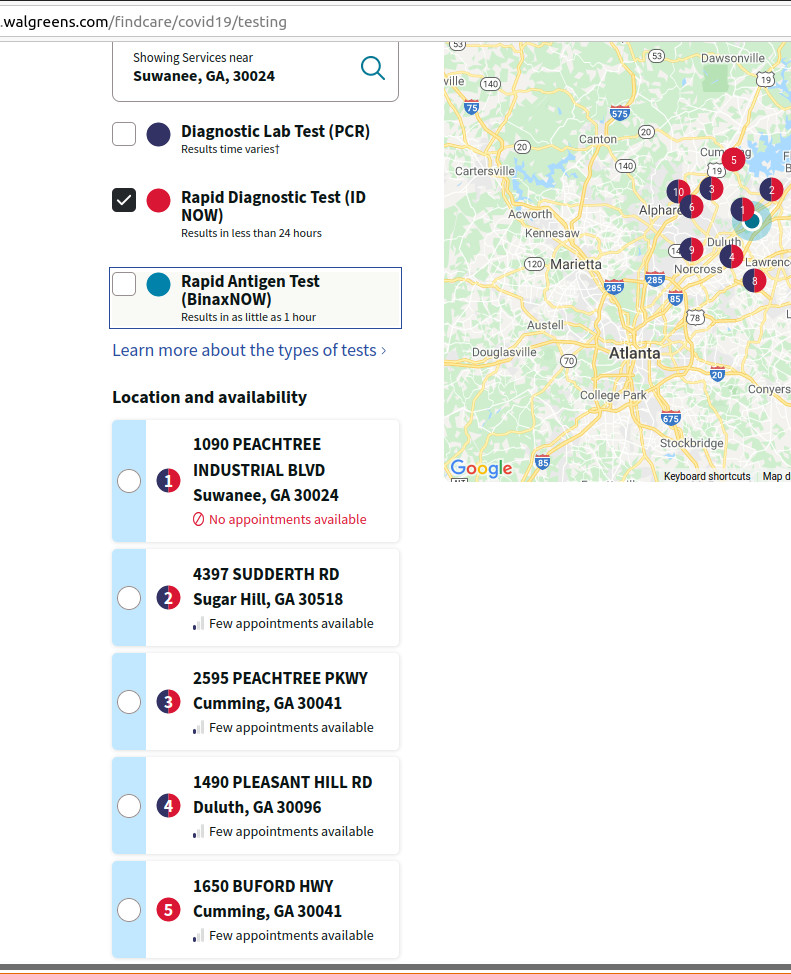

i had trouble getting a test in GA (only checked CVS though), and the PCR tents were closed for the holidays, and eventually 4 days passed without anyone having symptoms, so i just flew to boston and planned to quarantine for 5 days and get tested on day 3 and day 5 as before, and also tried to source a day 0 test since it was 5 days out from dining.

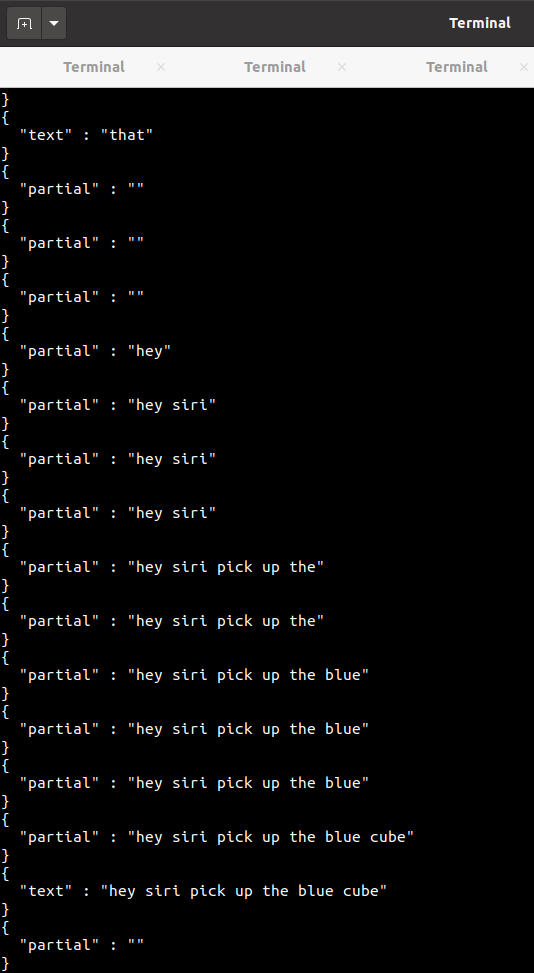

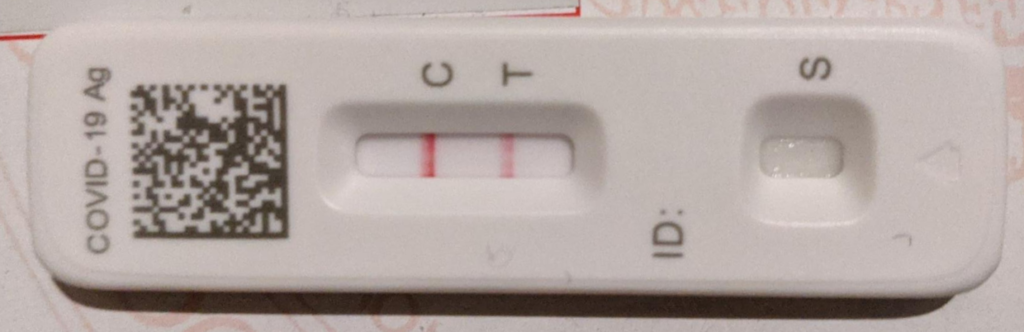

roomie also had a sore throat but hadn’t been able to get tested (waiting in rain for half an hour outside a clinic), so great, i could get tests for both of us. i called walgreens close to target (where i was going to curbside pick up some cough drops for him, since i wasn’t sure about spreading airport germs). they had tests in stock and i picked up four flowflexes with no issue. roommate’s came back negative!

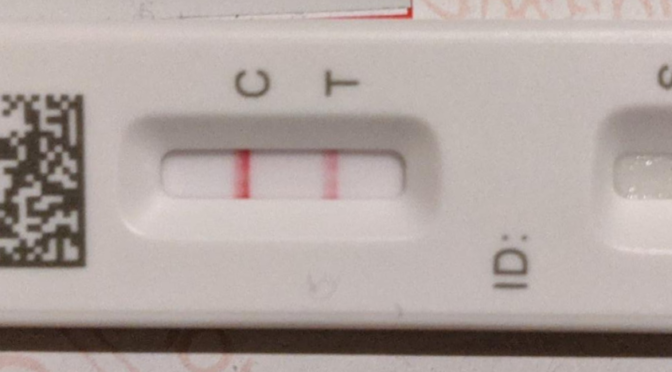

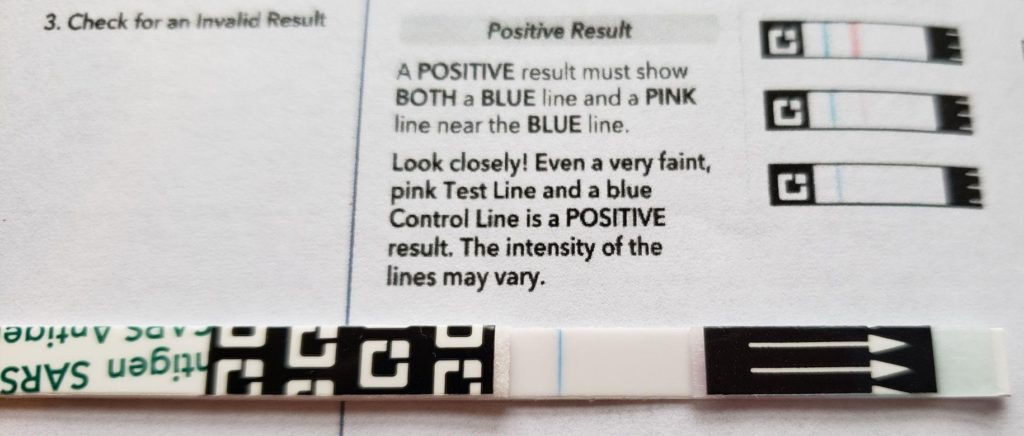

…mine came back positive, wtf??

i felt perfectly fine (zero symptoms) but from what i understood the test was very accurate (cue conditional probability lesson i’ll explain later), like i kept hearing false positive rate of 0.05% so i thought the likelihood i had covid, given a positive antigen and the amount of risk i’d taken over the past week (mostly the indoor restaurant dining and two flights), was pretty high

i actually thought that, since antigen tests were more likely to give false negatives than false positives, getting a positive despite having no symptoms meant it was extra likely i had (asymptomatic) covid, even more so than if i had symptoms and had a positive antigen. turns out it’s the exact opposite!
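
a minimal sketch of the bayes’ rule calculation behind that, in python. the 0.05% false positive rate is the number i kept hearing; the sensitivity and the two priors (how likely covid was before testing, without and with symptoms) are made-up placeholders to show the direction of the effect, not real estimates.

```python
def posterior_given_positive(prior, sensitivity, false_positive_rate):
    """P(covid | positive test) via Bayes' rule.

    prior               = P(covid) before seeing the test result
    sensitivity         = P(positive | covid)
    false_positive_rate = P(positive | no covid)
    """
    true_pos = sensitivity * prior
    false_pos = false_positive_rate * (1.0 - prior)
    return true_pos / (true_pos + false_pos)


FALSE_POSITIVE_RATE = 0.0005  # the 0.05% figure from the post
SENSITIVITY = 0.8             # ASSUMPTION: placeholder, not a measured value

# ASSUMPTION: both priors are invented purely for illustration
scenarios = [
    ("no symptoms (assumed prior 1%)", 0.01),
    ("symptoms (assumed prior 30%)", 0.30),
]

for label, prior in scenarios:
    p = posterior_given_positive(prior, SENSITIVITY, FALSE_POSITIVE_RATE)
    print(f"{label}: P(covid | positive) = {p:.4f}")
```

whatever numbers you plug in, a higher prior (e.g. having symptoms) gives a higher probability that a positive is real, which is why a positive with no symptoms is the less convincing one, not the more convincing one.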

isolation

i freaked the fk out and shut myself in my room right away and asked everyone to mask up around me, and i avoided leaving my room and just went to sleep.

took a second test the next day to convince myself before i really committed to the 10 days of isolation and trying to not spread it to my roomies, and all my roomies had to deal with being a close contact also

also positive, fk me, at this point i started notifying everyone i’d interacted with as i was certain i had covid (certain enough i wasn’t even going to figure out harvard pcr test for confirmation)

which, aside, still have no idea how to notify airlines – i think that comes through dr and department of health? –

presumably you contact a dr and they deal with everything – now i know i guess you can call insurance hotline ! and they will put you through to a nurse remotely (nurse hotline) same day

ok i have some trust issues with insurance and the health system, exacerbated by covid-caused instability in living situation, but anyway

=== notes ===

if you haven’t had to do a lot of home testing for covid (e.g. if you are home all the time or get tested regularly for school), here are some terms for you

- Antigen test – these are the rapid tests (results in ~15 mins) that are available in drug stores (Walgreens, CVS) for ~$10 to $15

- QuickVue, BinaxNow, and Flowflex are the ones I’ve seen in the United States

- PCR test – this almost always refers to RT-PCR, reverse-transcriptase polymerase chain reaction. these are the tests that need to be sent to a lab and take 1-3 days for results

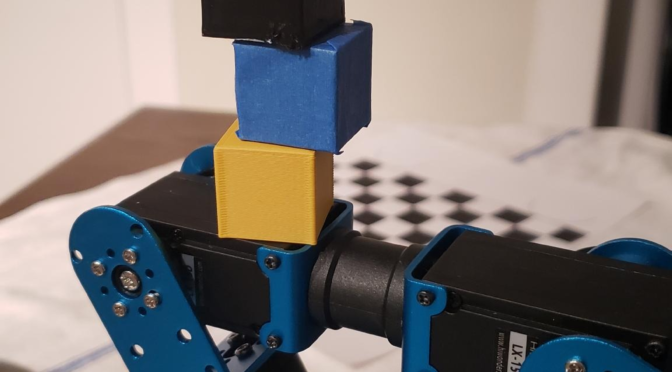

- Rarer, there are the isothermal PCR tests, which include Cue tests that Google hands out to its employees, where you take a self-sample like the antigen tests but it goes into a cartridge that runs PCR and reports results after 20-30 mins. these are a little more accurate (prolly) than the antigen tests

- (Traditional PCR goes through multiple heat-cooling cycles, so I imagine the isothermal part is what saves the time)

- Both these are Nucleic Acid Amplification Tests (NAATs), for more see CDC website. The layman’s explanation I came up with for traditional PCR (maybe similar for isothermal PCR?) is you have a search keyword (a primer). if the keyword (nucleic acid) is found, the heat-cooling cycle makes a bunch of copies of it (amplification), which you can then detect more easily (test)

- Anterior nasal swab – the PCR and Antigen tests can both use this method which is easy to perform by yourself at home. it’s where you shove a qtip or other stick maybe an inch into your nose and kinda rub it in circles and then (using same stick) do the same for other nostril, which i would’ve found so gross but now it’s normalized

- (other sampled areas include back of throat, or way up in the back of the nose which was what people complained about at the beginning of the pandemic ALMOST TWO YEARS AGO).

- Color kit – both MIT and Harvard use these for PCR testing. you self-sample and return to contactless drop off in collection bins around campus. each kit has a barcode which you link to your harvard/mit id online, and results are reported on the color website

COVID facts

- Original strain: 5-9 days to infectious/symptoms

- Delta: 3-5 days to infectious/symptoms

- Omicron: 1-3 days to infectious/symptoms

- Generally can be infectious two days before any symptoms, when you feel healthy!

- Asymptomatic = no symptoms, indistinguishable initially from pre-symptomatic

- Isolation: stay inside your room, you’re infectious

- Quarantine: stay inside your house just in case

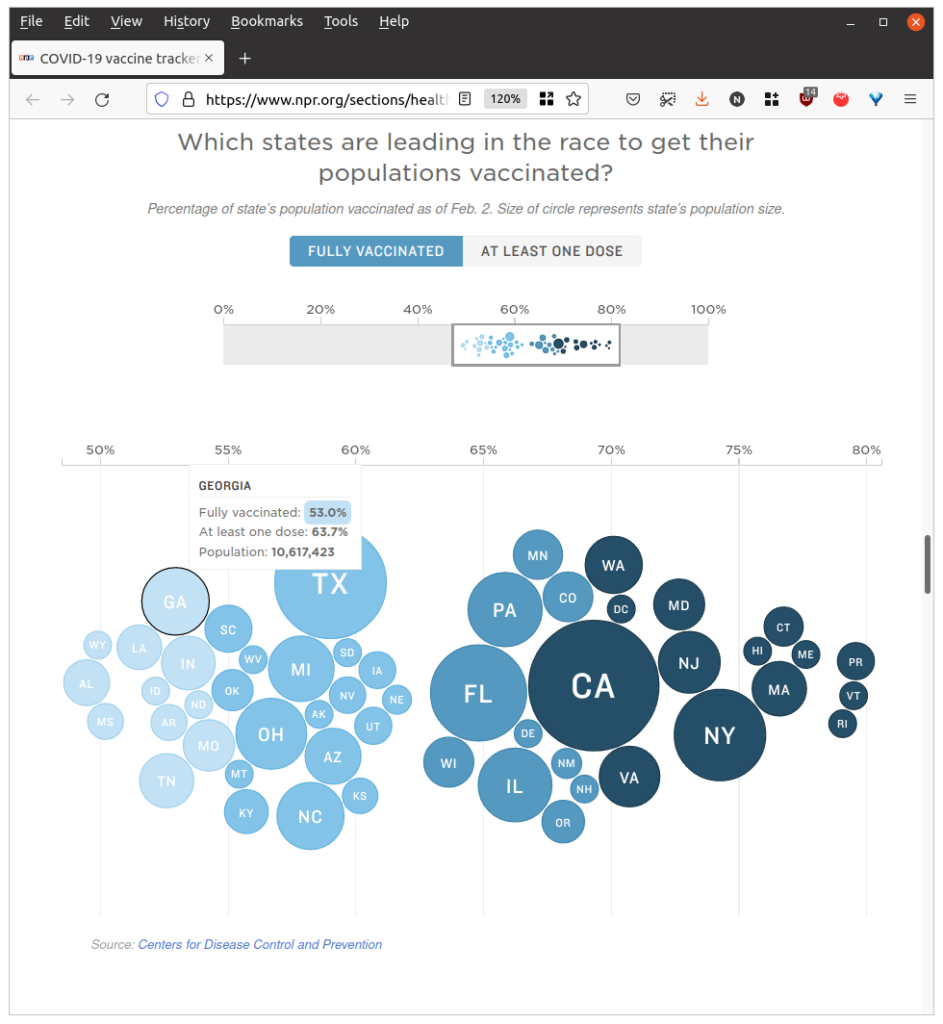

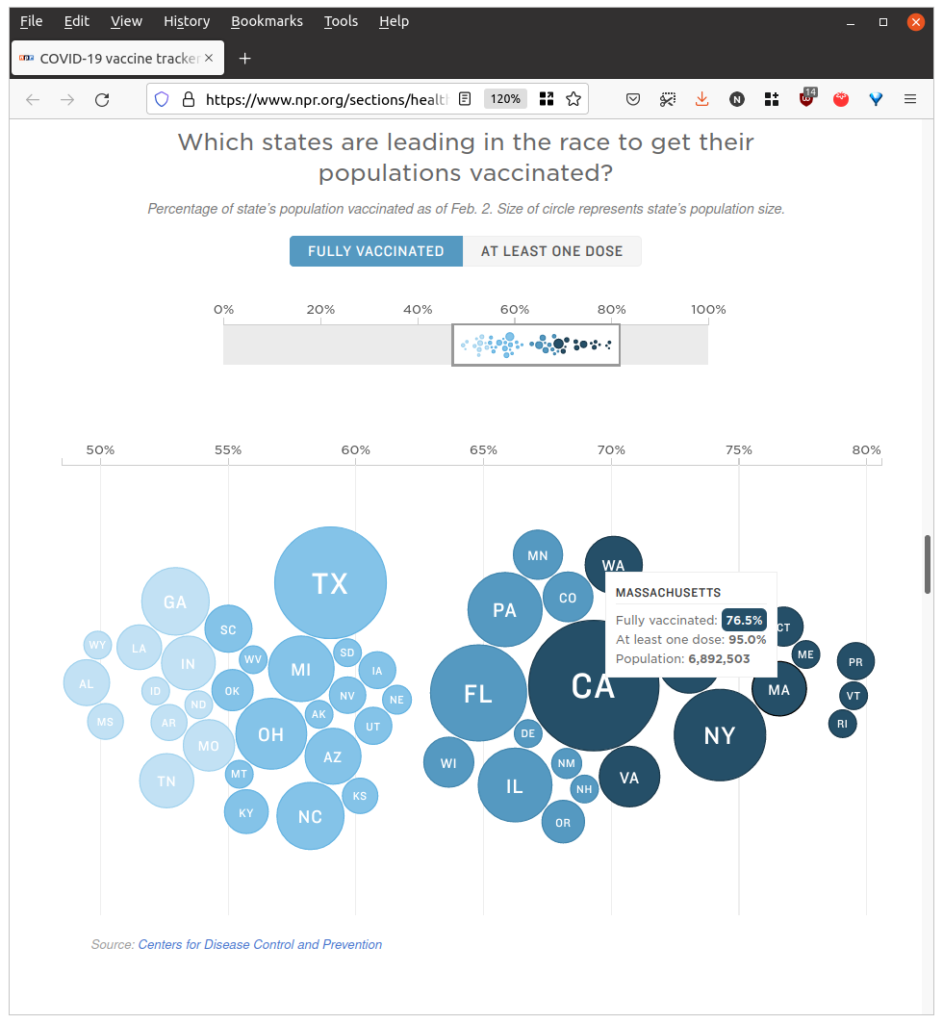

- Generally now considered that 2-dose vaccination is still very effective for preventing severe illness, even when vaccinated people do get infected (so-called “break-through infections”)

- Booster shots now recommended if you’re 6+ months out

- United States topped 1 million cases a day thanks to omicron

- In the US, more people died in 2021 than 2020 from COVID

- In MA, hospitalizations exceed the peak of last year’s winter surge

For a while it really seemed like Delta might be the last wave, the numbers didn’t go up nearly as much as last year even after all the travelling for thanksgiving. And for a while it was unclear if omicron would actually behave very differently if a large portion of the population was vaccinated. but it sure did !! booster shots have become mandatory for returning to school for Harvard and MIT