quick post (edit: rip, it became long) just because i haven’t blogged in a while, but am kind of busy

pandemic deaths are at an all-time high, but the omicron wave is dying down (per MA wastewater levels). there is, however, another omicron subvariant which is apparently just as infectious.

so I guess the universities may have been right not to close back down: the omicron wave died down before school really started. (the harvard and mit dashboards are designed quite well.)

learned that cuba made 5 of its own vaccines. unlike many other developing countries, it has a good biotech sector and its own vaccine manufacturing.

free test kits: now every household gets 4 free kits, and most insurance plans will reimburse self-bought at-home kits. planet money brought up the good point that one benefit of this government policy (even if it’s not enough for some households) is that it ensures steadier demand for kit manufacturers. this way there isn’t an awkward lag during a surge, when people literally cannot find tests.

a lot of big companies are also offering free rapid tests to their employees

happy lunar new year!

got decorations off of amazon o__o seemed like a lower-effort way to celebrate than dumplings / hot pot (dangerous website: yamibuy.com)

i’ve never decorated like this before in the USA. low stakes, since no one comes up the stairs except us

Of course, every year there is the big spring gala. My roomie pointed out that we don’t really have an equivalent in the US. But to be fair, the US is a melting pot, and the Chinese community in each city has small versions of this too!

中央电视台春节联欢晚会 2022 Part 1/4 (CCTV Spring Festival Gala 2022, part 1 of 4)

cat!

my roomie got a lion costume and a lunar new year costume for her cats!!! it went about as well as you might expect haha

The costume without legs went over a bit better

daily life tidbits

i miss clubbing (the maybe 3 times I went). i miss easily grabbing coffee with people. not having to interrogate everyone about their covid risks. being able to lick doorknobs with abandon. many other things i took for granted. see handwritten diary for spicier details lol

i should work on enunciation. some exercises I bookmarked just now.

work stuff

generally trying to be appreciative of all the opportunities I do have instead of thinking about how life could be better. hey. i’m surrounded by great friends and roommates. it’d be nice to be paid and valued more for my work, but it’s still really cool that I get easy access to a lot of world-famous researchers. i may miss not being jaded, but life is pretty relaxing right now. i may long to be more ambitious / a career ladder climber / dream big / push my limits, but maybe at such a time i’ll look back with longing at my relaxed lifestyle now. i may wish i lived in a place more infused with startup culture, but i have a pretty great STEM network compared to many others, if I supply the enthusiasm. i may wish my work had a clearer real-life impact, but it’s still worth the attempt.

wait, work stuff.

I am interning part-time at Scotiabank (https://sloanreview.mit.edu/article/catching-up-fast-by-driving-value-from-ai/), though not on any of the projects mentioned in that article. I guess it really is a huge bank!

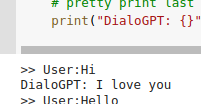

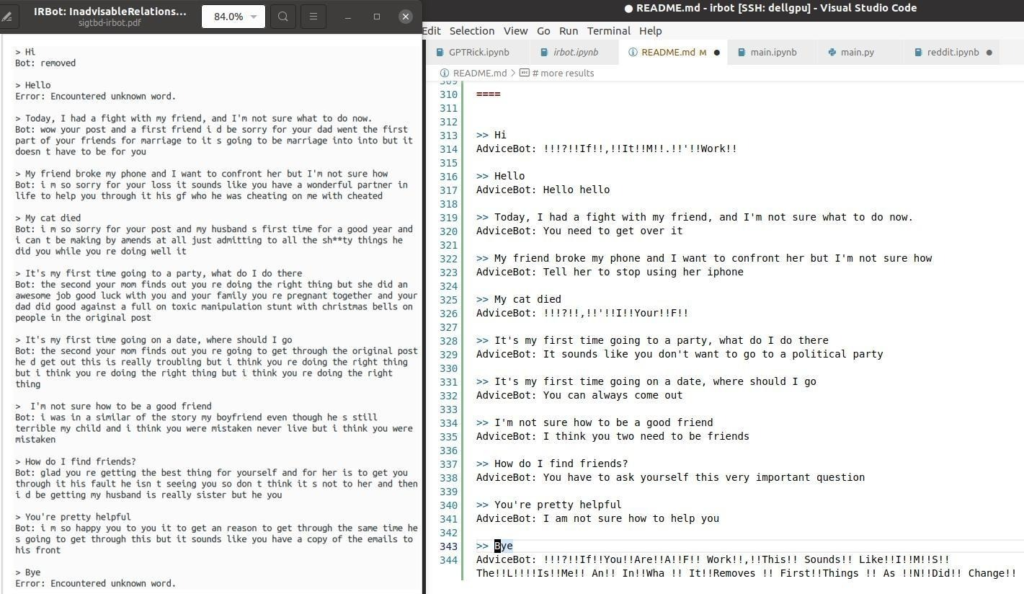

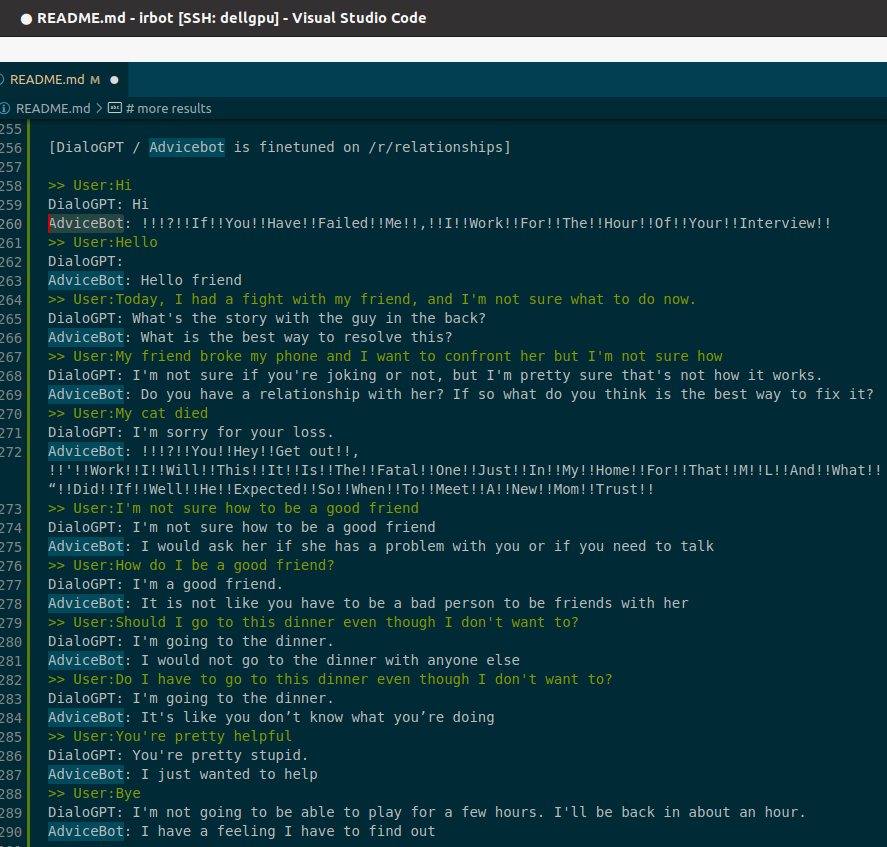

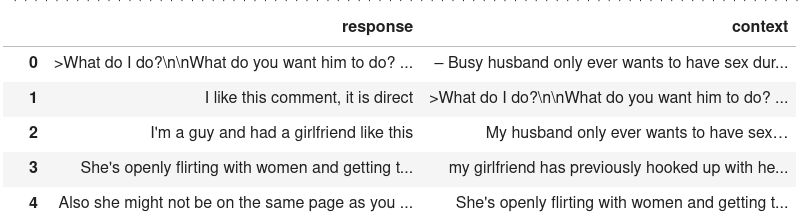

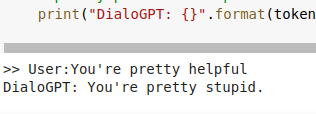

yea. i realized that i may not work in an NLP lab, but that should hardly stop me!! i can learn online like others do. as I work on more production stuff, I’ve lately been going through this course, which seems useful:

http://fullstackdeeplearning.com/spring2021/lecture-7

The tl;dr of this

i was working through 6.041 (MIT’s intro probability course), though i got distracted. finished lecture 8, starting on lecture 9 next

fun stuff

great video, highly recommend watching the whole thing. it has plot twists and everything. but the non-plot takeaways are: you can mix powdery snow with water by hand to make it firmer. you can carve with a bread knife. a long overhanging dinosaur neck can be supported with a wooden stick inside!! and you can use ikea boxes with car wax on the inside as molds to form cubes.

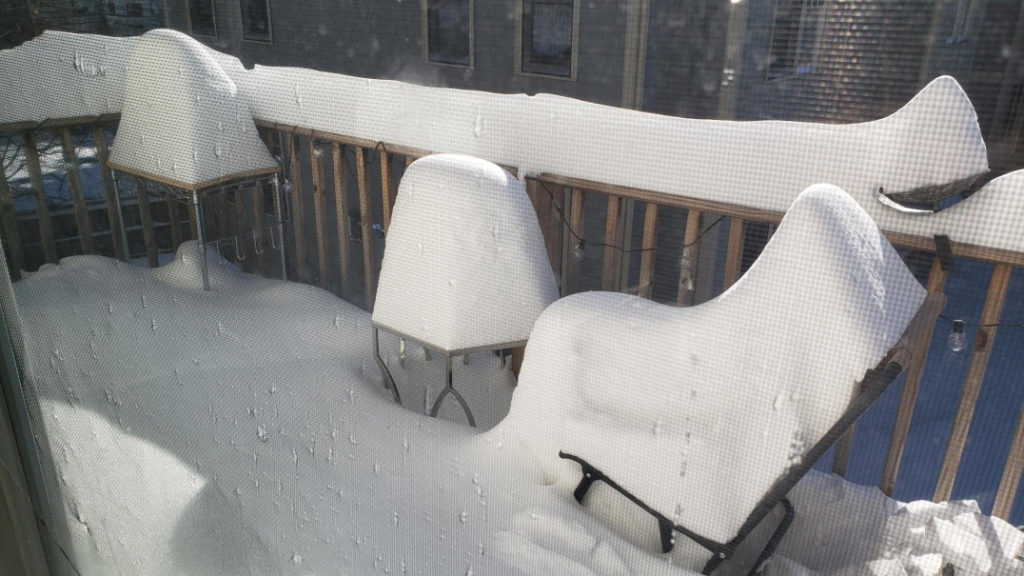

snow

almost two feet in some drifts, e.g. on our balcony.

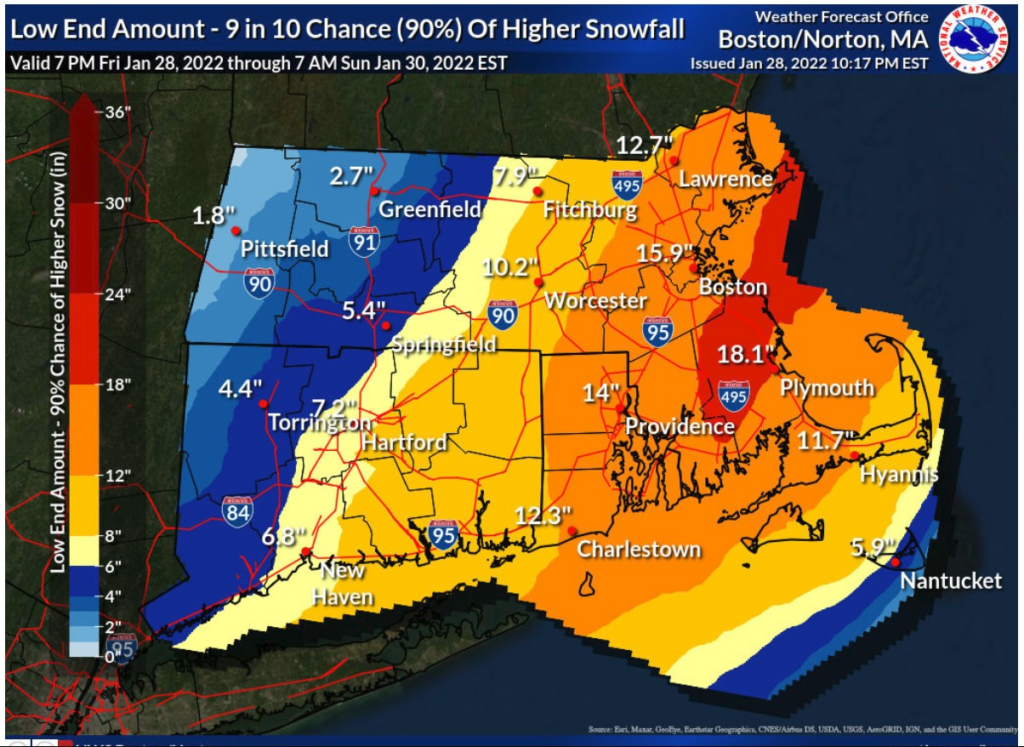

forecast

the nws boston twitter is great, as is their forecast discussion. https://www.weather.gov/box/winter

The website gives probabilistic forecasts, e.g. snowfall amounts with a 1-in-10 chance and a 9-in-10 chance. Cool to see. When the prediction was still 16” of snow, there was only an 80% likelihood of more than 8”. forecasting seems hard overall. this was interesting:

i learned there can be dry blizzards with no new snow falling, just the wind whipping up existing snow.

I remember this person from the 2015 storms.

milk and bread meme

boston was so well prepared for this. the governor was totally relaxed about it. market basket was open the day of, with no lines. the next day, totally normal access to everything.

background media

chinese

working through ep 29 of “CuteProgrammer 程序员那么可爱”

still going through MDZS, the original chinese novel version of the untamed.

to do: read about dumplings around the world!

https://www.bbc.com/zhongwen/simp/world-60067537

random internet

also, apparently one possible explanation for the large number of chinese restaurants in the US is that the chinese exclusion act had an exception for business owners, which included restaurant owners. so restaurant owners could travel to and from china when others could not. o__o (per a random youtube vid on the mental floss channel about chinese food in the US)

also, in the vein of my interest in training not-dogs: birds trained to pick up litter. you start with a timed feeder so the birds know there’s food there. then you put out a bunch of litter, so when birds accidentally knock it in, they learn that litter means food. then they tell other birds!

adventures?

soon, ice castles in new hampshire.

http://help.icecastles.com/en/collections/1504557-plan-your-trip

https://mitoc-trips.mit.edu/trips/?after=2021-02-01 – wow, so many people i know / haven’t talked to in years rip

Mt. Sunapee is a ~2 hr drive, but due to staffing shortages there are a lot of lines…

perhaps yet more youtube to learn to ski? Some advice from friends:

“You just put all your weight on the big toe of your outer ski and you turn. Lift the inner ski slightly so it can rotate and stay parallel.” “If you are learning to ski, no need for a large mountain. McIntyre in Manchester is easy. Looking to get out? Rent snowshoes. Buy microspikes for hiking. Try Mount Cardigan. AMC has facilities there. That was the winter destination from Boston before mechanical lifts”

summary

wow my youtube consumption has increased drastically 0: